What is the environmental impact of video game graphics?

According to a study by Statista, the video game sector was expected to generate over US$155 billion in revenue worldwide by 2021. This figure can be explained by the growing number of gaming platforms, the diversification of the types of games available to consumers, and the democratization of the industry driven by the emergence of free-to-play games. By 2022, video games had attracted almost 1.8 billion players across the globe, giving the entertainment experience a social dimension and fostering the emergence of new sectors such as streaming and esports.

However, all these games, albeit virtual, run on physical hardware and therefore consume energy. This article presents and compares the energy consumption of different video games at different graphics settings. To find out how much energy playing them actually consumes, we evaluated the following video games: Assassin’s Creed Valhalla, Total War Warhammer III, Borderlands 3, Anno 1800 and War Thunder.

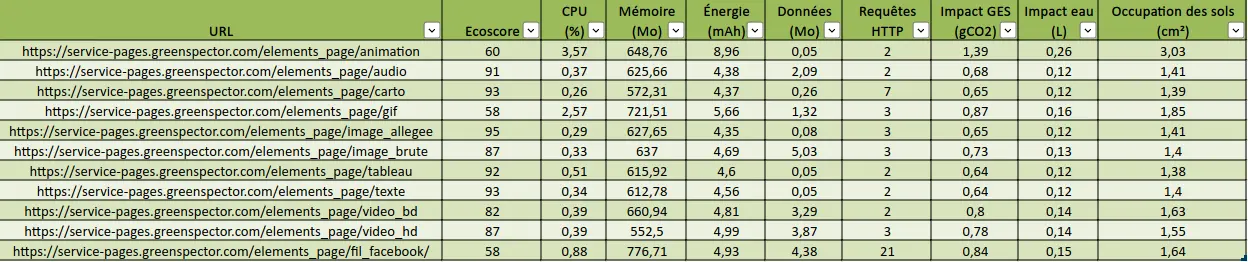

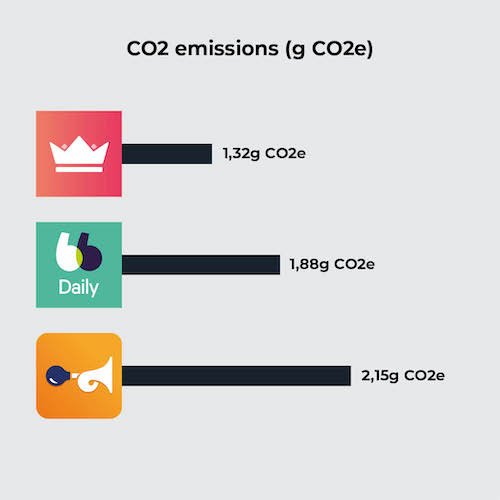

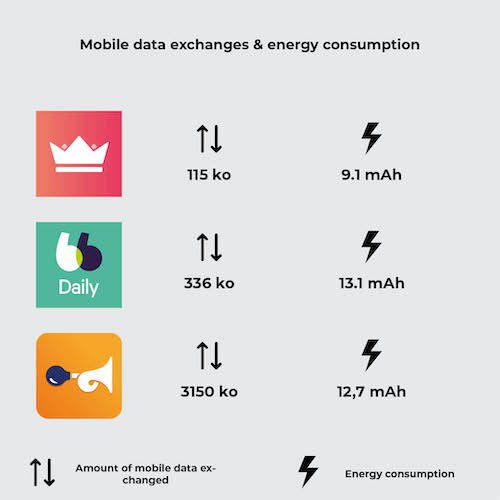

We have previously carried out a study on mobile games.

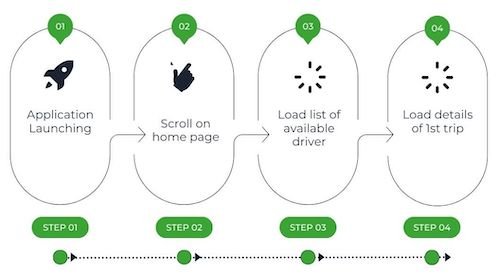

Selection and methodology

These video games were selected because each one includes a built-in benchmark. Using these benchmarks as measurement scenarios makes our experimental protocol replicable, while eliminating the human factor from the results.

A benchmark is a feature offered by the game that measures the performance of a system (the entire PC) or of one of its components (CPU, GPU, memory, etc.) using a predefined scenario and selected settings.

We also took care to cover several game genres, such as RPG (role-playing game), strategy and simulation.

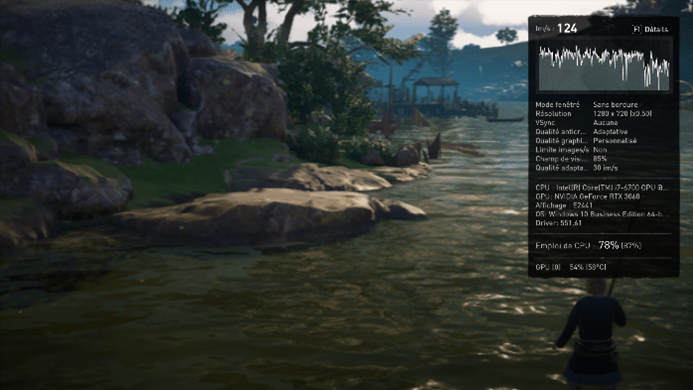

We measured these video games on a PC with the following configuration:

- Processor: Intel Core i7-6700

- Memory: 32 GB DDR4 RAM

- Graphics card: NVIDIA GeForce RTX 3060 12 GB

This equipment was supplied to us by OPP!, a company offering PC and Mac repair and maintenance services, as well as individual component sales.

The screen used is an LG E2441 with the following specifications:

- Screen technology: LED

- Screen size: 24”

- Resolution: 1920×1080

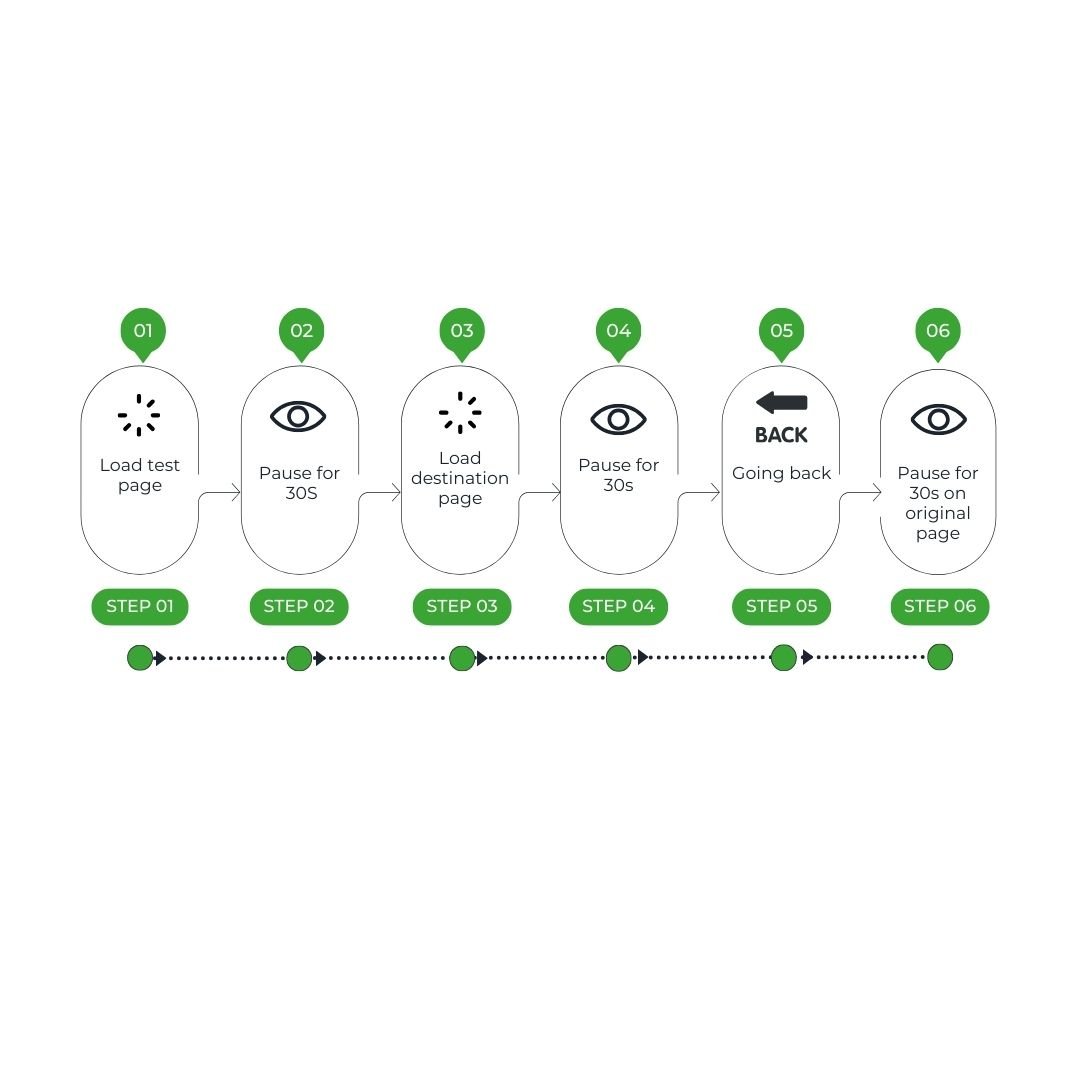

We collected energy metrics with a measurement module, connected to our Greenspector Studio software and placed between the mains socket and the power supplies of the PC and the monitor.

Benchmarks were carried out in 2 different graphics configurations:

- A configuration with the maximum graphics settings offered by the game

- A configuration with the minimum graphics settings offered by the game

Six iterations were run for each scenario to ensure reliable results.

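Benchmarks last between 80 and 240 seconds. Since the results are compared on the basis of average power rather than total energy, these differences in duration do not affect the results presented.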
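Because runs differ in length, the comparison relies on average power, that is, energy divided by duration. The sketch below shows one way to turn a sampled power trace into energy and average power, then average over iterations. It assumes evenly spaced samples and uses the trapezoidal rule; the traces are hypothetical, and this is not a description of how Greenspector Studio processes its data.

```python
from statistics import mean


def energy_wh(samples_w, interval_s):
    """Integrate evenly spaced power samples (W) with the trapezoidal rule; returns Wh."""
    if len(samples_w) < 2:
        raise ValueError("need at least two samples")
    joules = sum((a + b) / 2 * interval_s for a, b in zip(samples_w, samples_w[1:]))
    return joules / 3600  # 1 Wh = 3600 J


def average_power_w(samples_w, interval_s):
    """Average power = energy / duration, so runs of different lengths stay comparable."""
    duration_s = (len(samples_w) - 1) * interval_s
    return energy_wh(samples_w, interval_s) * 3600 / duration_s


# Hypothetical traces (W) sampled once per second; real runs last 80-240 s,
# and the study repeats each scenario six times.
iterations = [
    [200.0, 240.0, 260.0, 250.0, 210.0],
    [205.0, 245.0, 255.0, 248.0, 215.0],
]
per_run_w = [average_power_w(trace, interval_s=1.0) for trace in iterations]
print(f"mean of per-run average power: {mean(per_run_w):.1f} W")
```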

Graphic evolution impacts power

Modern games incorporate higher-quality graphics with ultra-detailed textures, advanced visual effects such as dynamic lighting, real-time shadows and sophisticated particle effects. This graphical complexity requires considerable rendering and graphics processing capabilities.

Gamers are also increasingly opting for high display resolutions for an optimal visual experience. This puts extra pressure on the GPU (Graphics Processing Unit) to render detailed images at ultra-high resolutions.

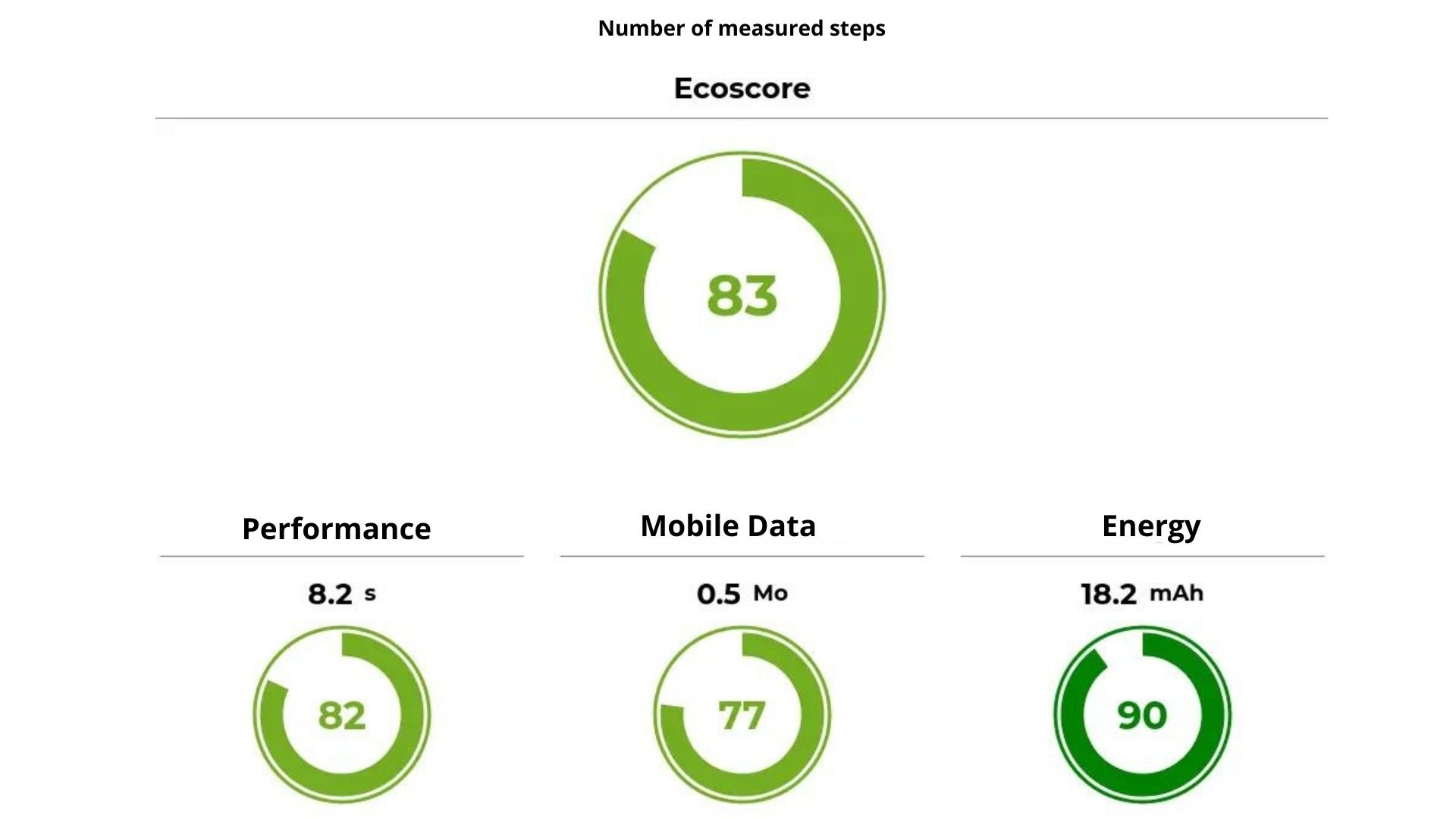

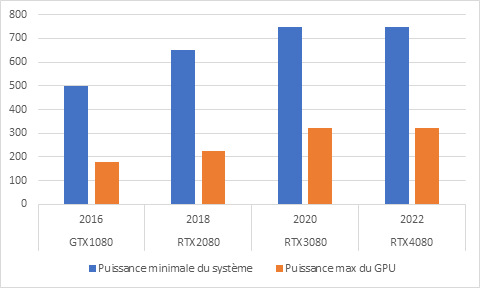

The energy consumption of these GPUs increases with each new generation, as shown below for NVIDIA:

Evolution of minimum system power and maximum GPU power by GPU release date

Developers exploit advanced rendering techniques such as ray tracing to realistically simulate the behavior of light in virtual environments. Although these techniques offer an unprecedented level of realism, they are computationally intensive and require high-end GPUs.

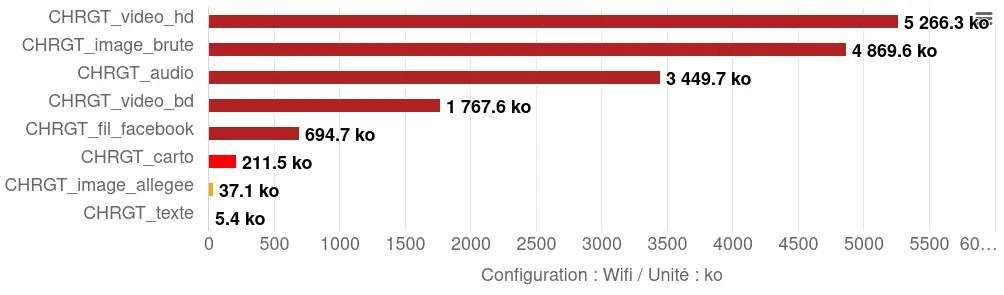

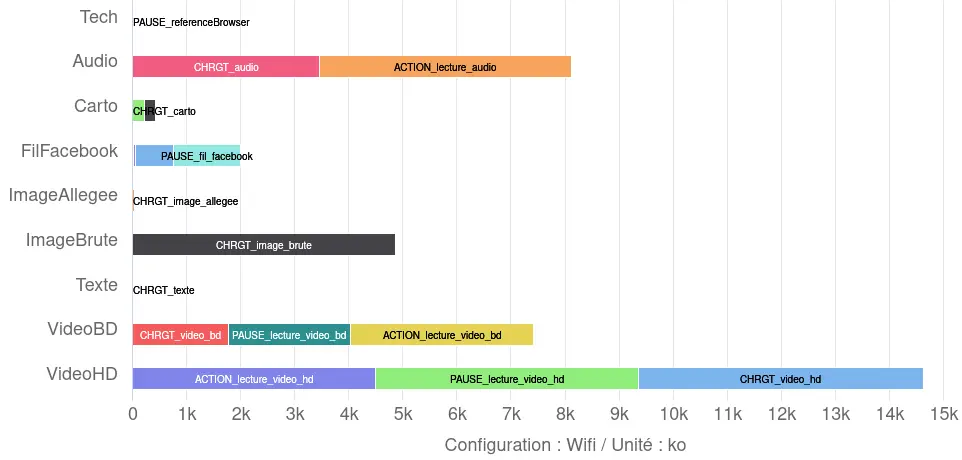

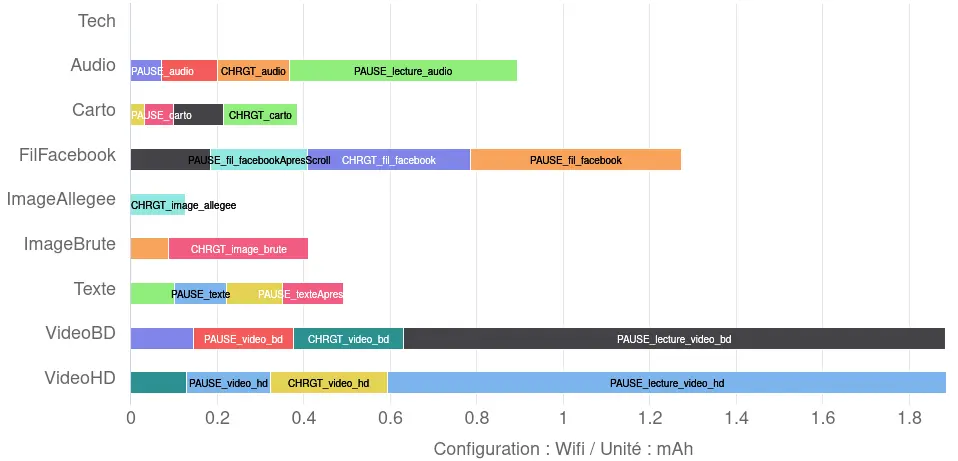

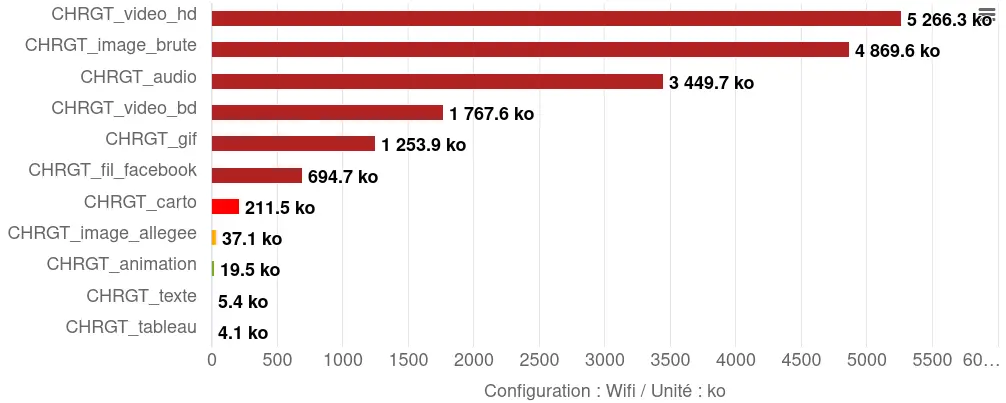

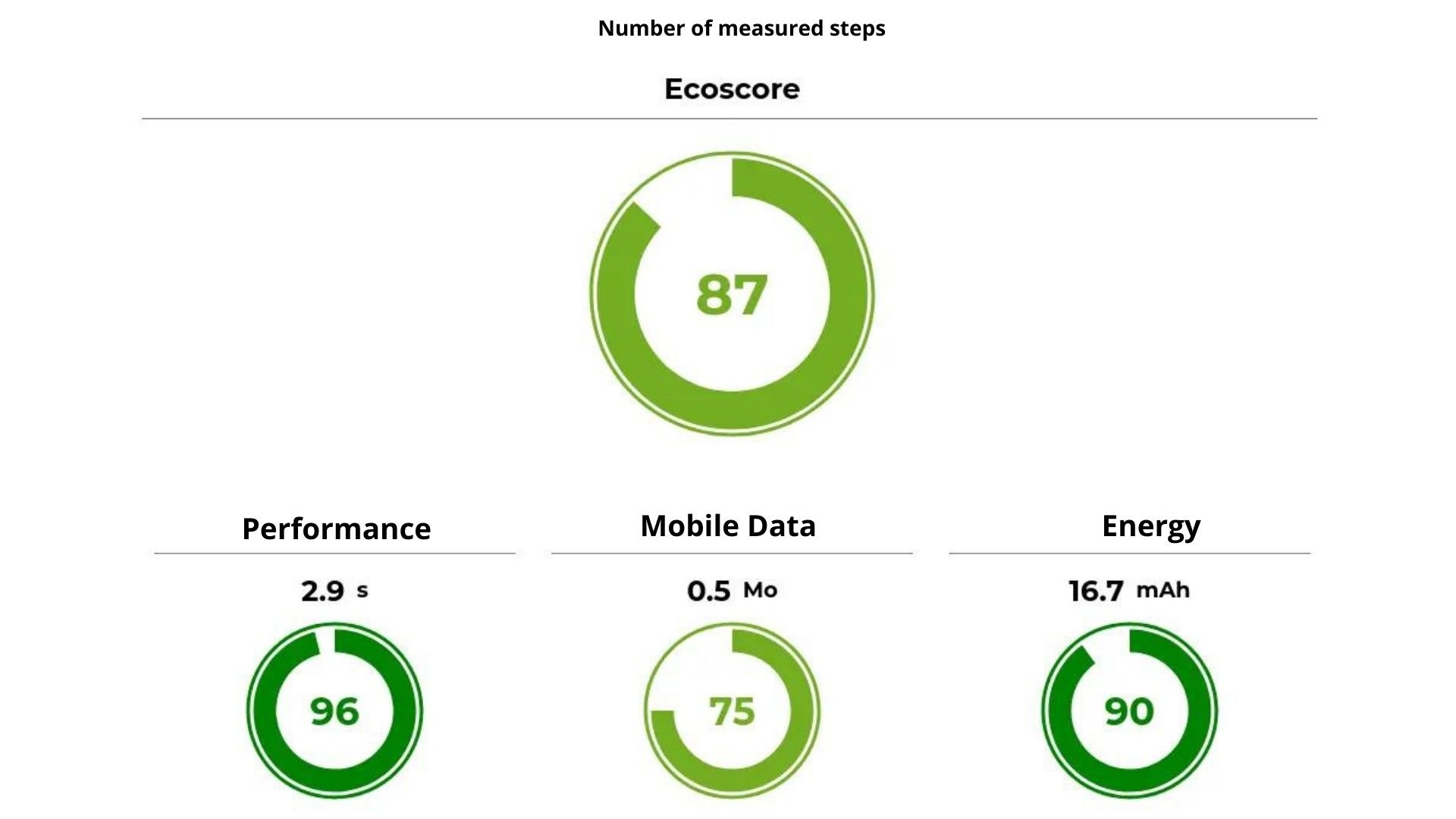

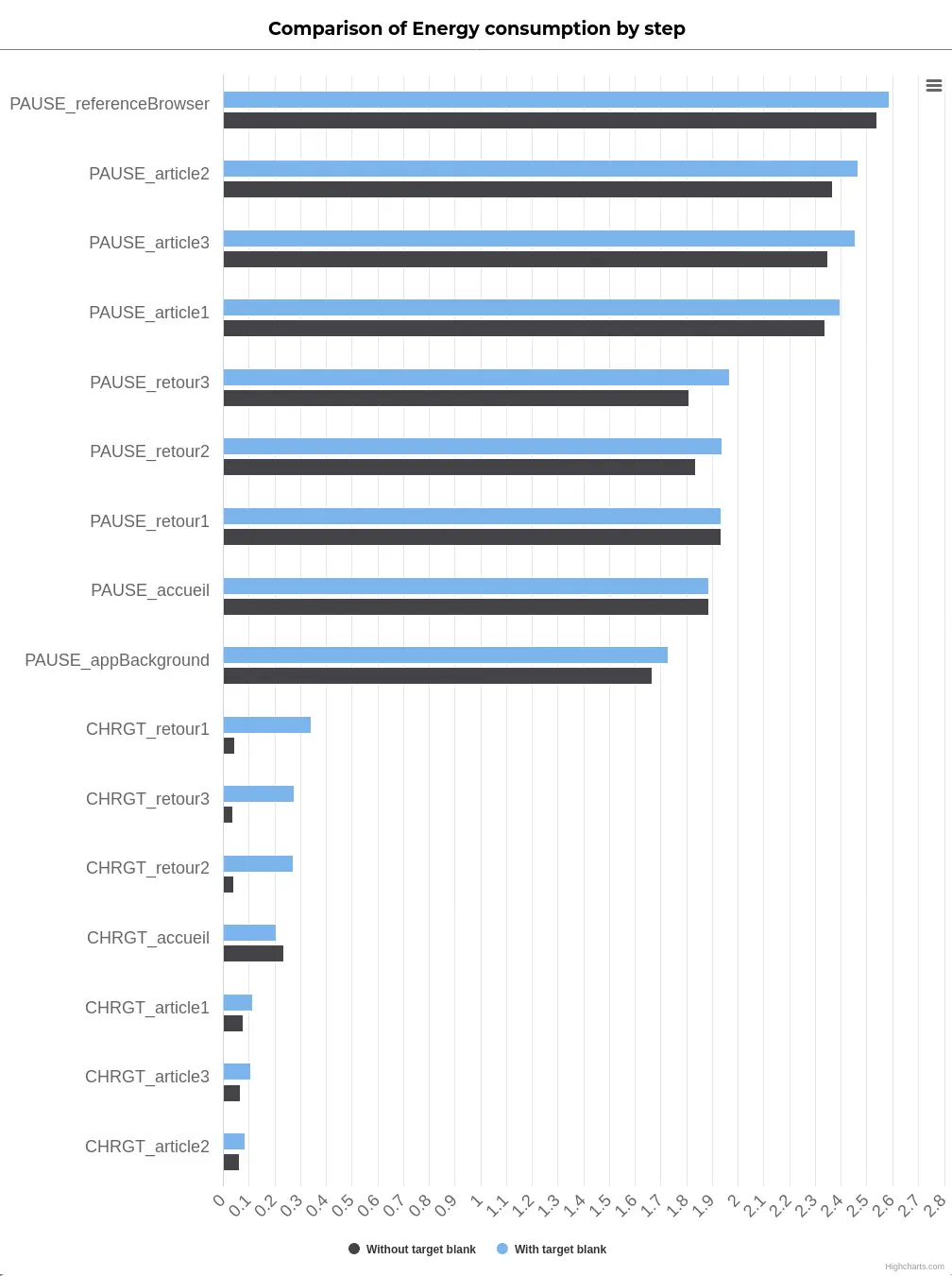

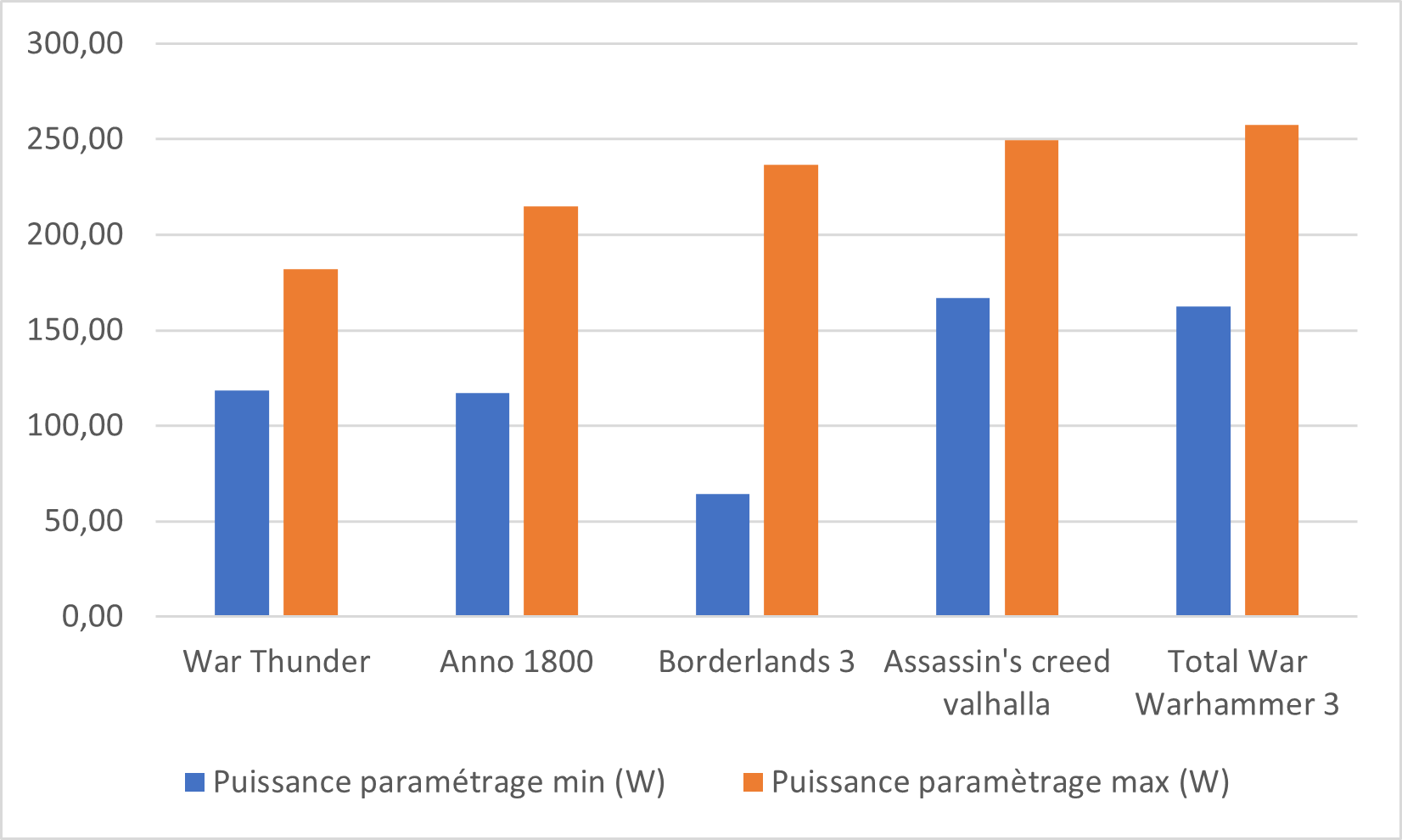

Consumption differences depending on settings

Measurements of average PC power on the lowest and highest graphics configurations for each game show a wide disparity between them.

Total PC power at minimum or maximum setting

Switching from the maximum to the minimum settings offered by each game reduces measured power by 45% on average. In the case of Borderlands 3, the reduction even reaches 72%.

In Anno 1800, the benchmark is a panoramic aerial view of the game’s map. This sequence highlights details of the game world, such as landscapes, iconic buildings and animations of everyday life.

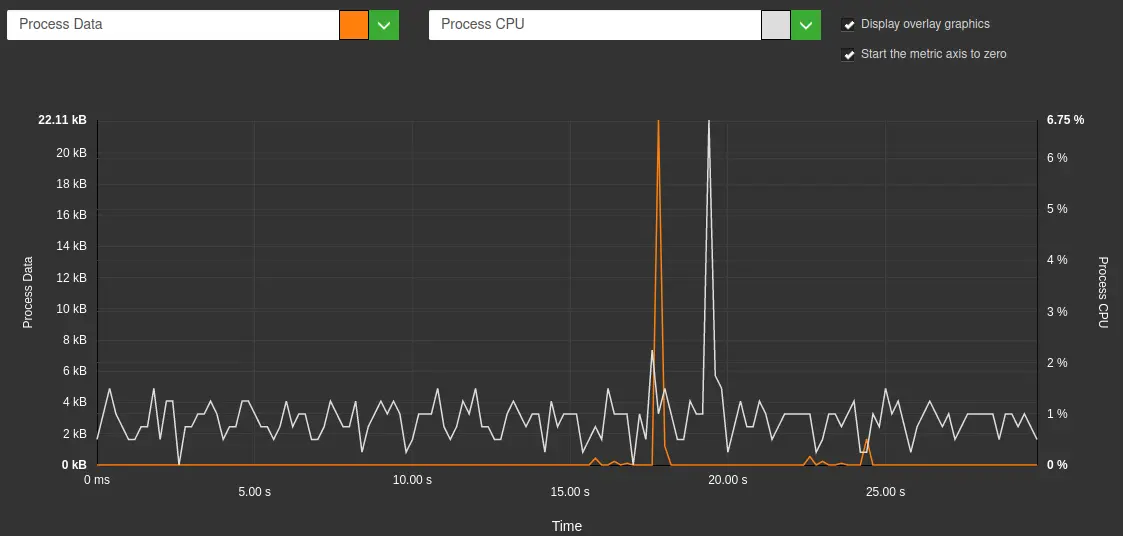

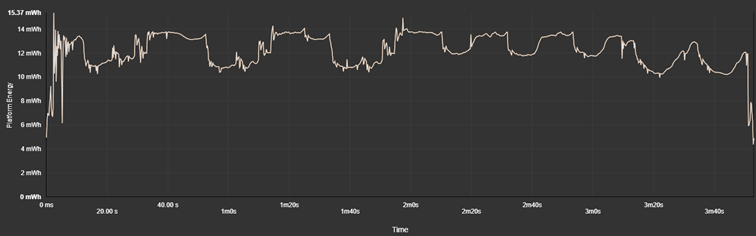

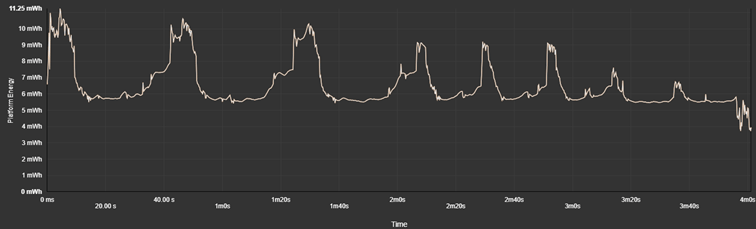

Below are graphs of one iteration measured at maximum settings and another measured at minimum settings. The benchmark first sweeps over the city from a close aerial viewpoint, then repeats the same trajectory 8 times from increasingly high viewpoints, which explains the 8 peaks on the graph.

The difference between the two setting levels is clear. At both settings, the further the camera is from the city, the lower the power peaks, as each successive pass of the scenario gets shorter.

In addition, at maximum settings power stays close to its peak for almost the entire scenario, whereas measurements at minimum settings show lower and shorter power peaks.

Anno 1800 benchmark power consumption with maximum settings

Anno 1800 benchmark power consumption with minimum settings
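The contrast between the two Anno 1800 traces can be put into numbers, for instance as the share of samples close to the run's peak power and the number of distinct high-power episodes. A minimal sketch, assuming evenly spaced samples; the 90% threshold and the short traces are hypothetical, chosen only for illustration.

```python
def share_near_peak(samples_w, threshold=0.9):
    """Fraction of samples at or above `threshold` x the trace's own maximum."""
    peak = max(samples_w)
    near = sum(1 for p in samples_w if p >= threshold * peak)
    return near / len(samples_w)


def count_peaks(samples_w, threshold=0.9):
    """Count upward crossings of `threshold` x max, i.e. separate high-power episodes."""
    limit = threshold * max(samples_w)
    peaks, above = 0, False
    for p in samples_w:
        if p >= limit and not above:
            peaks += 1
        above = p >= limit
    return peaks


# Hypothetical traces (W): max settings stay high, min settings show short spikes.
max_trace = [230, 240, 238, 241, 236, 239, 240, 237]
min_trace = [120, 160, 118, 115, 158, 117, 116, 159]
for label, trace in (("max", max_trace), ("min", min_trace)):
    print(f"{label}: {share_near_peak(trace):.0%} of samples near peak, {count_peaks(trace)} peak(s)")
```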

A Statista survey conducted in December 2023 revealed that 22% of US adults aged 18 to 29 spent six to ten hours a week playing video games. Respondents in this age group were also more likely than others to be avid gamers, with 8% playing video games for more than 20 hours a week on average.

These figures allow us to estimate overall energy consumption for different types of players based on their play time, assuming the benchmark is representative of in-game consumption. We projected energy consumption from the measurements taken at each game's minimum and maximum settings.

The average consumption for one hour of play at minimum settings is 0.168 kWh, and 0.254 kWh at maximum settings. These results are higher than those of the European study on the environmental impact of digital services. The latter shows a consumption of 0.137 kWh for one hour of PC gaming at medium resolution.

Energy consumption (Wh) over 6, 10 and 20 hours of play

| Game | 6 h, min settings | 6 h, max settings | 10 h, min settings | 10 h, max settings | 20 h, min settings | 20 h, max settings |
|---|---|---|---|---|---|---|
| War Thunder | 1469.70 | 1460.78 | 2449.50 | 2434.64 | 4899.00 | 4869.28 |
| Anno 1800 | 843.26 | 1352.27 | 1405.43 | 2253.78 | 2810.86 | 4507.56 |
| Borderlands 3 | 522.33 | 1537.53 | 870.55 | 2562.55 | 1741.09 | 5125.09 |
| Assassin’s Creed Valhalla | 1110.49 | 1618.73 | 1850.82 | 2697.88 | 3701.65 | 5395.76 |
| Total War Warhammer III | 1108.08 | 1651.01 | 1846.80 | 2751.68 | 3693.60 | 5503.37 |
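The 10-hour and 20-hour columns appear to be linear scalings of the 6-hour values, up to rounding (for example, 1469.70 × 10 / 6 = 2449.50), which amounts to assuming the benchmark's average power holds for a whole session. The sketch below reproduces that projection and the per-hour averages of 0.168 kWh and 0.254 kWh from the 6-hour column; the helper names are illustrative.

```python
# Energy over 6 hours of play (Wh) from the table: (minimum settings, maximum settings).
SIX_HOURS_WH = {
    "War Thunder": (1469.70, 1460.78),
    "Anno 1800": (843.26, 1352.27),
    "Borderlands 3": (522.33, 1537.53),
    "Assassin's Creed Valhalla": (1110.49, 1618.73),
    "Total War Warhammer III": (1108.08, 1651.01),
}


def project_wh(energy_wh, from_hours, to_hours):
    """Linear projection: assumes constant average power over the whole session."""
    return energy_wh * to_hours / from_hours


def mean_kwh_per_hour(column):
    """Average across games of one-hour consumption (kWh); column 0 = min, 1 = max."""
    per_hour = [values[column] / 6 / 1000 for values in SIX_HOURS_WH.values()]
    return sum(per_hour) / len(per_hour)


print(f"War Thunder, 10 h at min settings: {project_wh(1469.70, 6, 10):.2f} Wh")
print(f"per hour: {mean_kwh_per_hour(0):.3f} kWh (min), {mean_kwh_per_hour(1):.3f} kWh (max)")
```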

At maximum settings, gamers playing 6 to 10 hours a week therefore consume between 1.5 kWh and 2.5 kWh per week. For more involved gamers playing around 20 hours a week (almost 3 hours a day), their PC and screen consume about 5 kWh per week. For comparison, a conventional refrigerator consumes an average of 3.29 kWh per week.
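The weekly figures follow from multiplying play time by the per-hour averages (0.168 kWh at minimum settings, 0.254 kWh at maximum settings). A short sketch of that arithmetic, including the comparison with a refrigerator's 3.29 kWh per week; it inherits the assumption that the benchmarks are representative of real play.

```python
KWH_PER_HOUR = {"min": 0.168, "max": 0.254}  # averages across the five games
FRIDGE_KWH_PER_WEEK = 3.29


def weekly_kwh(hours_per_week, setting):
    """Weekly PC + screen consumption for a given play time and settings level."""
    return hours_per_week * KWH_PER_HOUR[setting]


for hours in (6, 10, 20):
    low, high = weekly_kwh(hours, "min"), weekly_kwh(hours, "max")
    print(
        f"{hours:>2} h/week ({hours / 7:.1f} h/day): {low:.2f} to {high:.2f} kWh, "
        f"max settings = {high / FRIDGE_KWH_PER_WEEK:.2f} x a fridge"
    )
```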

Evolution by release date

At maximum settings, measured power increases with the games' release date.

| Game | Release date | Power at maximum settings (W) |
|---|---|---|
| War Thunder | November 2012 | 181.86 |
| Anno 1800 | April 2019 | 214.94 |
| Borderlands 3 | September 2019 | 236.62 |
| Assassin’s Creed Valhalla | November 2020 | 249.46 |
| Total War Warhammer III | February 2022 | 257.70 |

The maximum settings of video games reflect this technological evolution: game developers design their games to take advantage of the latest hardware advances, which translates into increasingly high demands on components. As a result, to take full advantage of graphics quality and game fluidity, gamers often have to invest in state-of-the-art hardware.

Beyond rendering these complex, detailed graphics in real time, games also rely heavily on the CPU to perform calculations related to physics, the artificial intelligence of non-player characters, collision management and other aspects of gameplay.

A technical director on Total War explained this in an interview with Intel:

“We model thousands of soldiers with a high level of detail applied to each in terms of animations, interactions, pathfinding decisions, etc.”

In video games, pathfinding consists of working out how to move a character from point A to point B while taking the environment into account: obstacles, other characters, path lengths, etc.
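To make the idea concrete, here is a minimal pathfinding sketch: breadth-first search on a small grid with obstacles, which finds a shortest path when every step costs the same. This is one of the simplest techniques; games more commonly use A* or related algorithms, and the article does not say which method Total War uses. Repeating a search like this for thousands of units illustrates why pathfinding can weigh on the CPU.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid where '#' marks an obstacle.

    Returns the list of (row, col) cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk back through predecessors
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#" and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


grid = [
    "....",
    ".##.",
    "....",
]
path = shortest_path(grid, (0, 0), (2, 3))
print(path)
```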

The processor also often juggles numerous tasks simultaneously, depending on what’s displayed on the screen. “Take a scene where two huge fronts with thousands of soldiers are smashing into each other, and you’ve zoomed in quite close,” explains the game’s technical director. “In this situation, the processor is divided mainly between entity agent-based combat, collision mechanisms and building matrix stacks to draw all the entities.”

In other words, the processor must simultaneously manage the presence and interactions of thousands of NPCs (non-player characters).

In addition, the more advanced the graphics, the heavier the workload on the GPU; if the processor cannot supply it with data and instructions fast enough, this leads to bottlenecks and slowdowns.

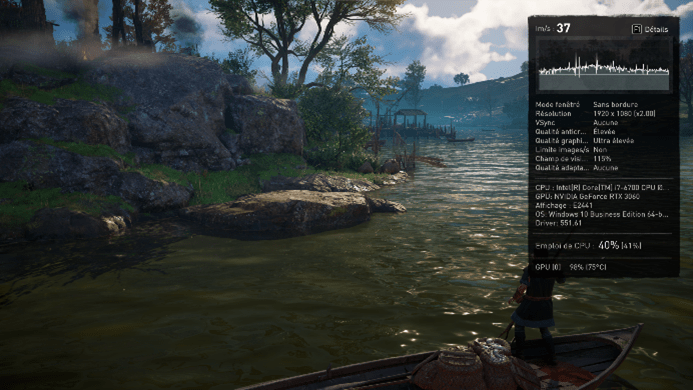

In Assassin’s Creed Valhalla, GPU utilization averages 46% when the settings are at their lowest. Conversely, at maximum settings, with options such as water reflections enabled and cloud quality at maximum, the graphics card runs at 99% utilization during the benchmark.

Optimization vs. graphic quality

We have just seen that setting a game to its maximum settings leads to high energy consumption. But does the visual result actually improve? Are all settings relevant to the gaming experience, given the PC configuration?

A useful metric for answering these questions is the number of frames per second (FPS), which is commonly used to gauge a game’s fluidity: the higher the FPS, the more fluid and responsive the game appears.

FPS (Frames Per Second) indicates the number of individual images (or “frames”) displayed on the screen each second.

The more complex an image is to generate, the longer the processor and graphics card take to produce it, and the lower the frame rate. So when the settings exceed the capabilities of the PC configuration, the player does not necessarily get a better visual experience, because the extra detail comes at the cost of a less fluid display.
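Two derived quantities help relate FPS to energy: frame time (1000 / FPS, in milliseconds) and energy per frame (power in watts, i.e. joules per second, divided by frames per second). A sketch with hypothetical power and FPS values, not measurements from this study:

```python
def frame_time_ms(fps):
    """Time available to produce one frame, in milliseconds."""
    return 1000.0 / fps


def energy_per_frame_j(power_w, fps):
    """Joules spent per frame: (J/s) / (frames/s)."""
    return power_w / fps


# Hypothetical operating points for the same scene at two settings levels.
for label, power_w, fps in (("max settings", 250.0, 40.0), ("min settings", 150.0, 120.0)):
    print(f"{label}: {frame_time_ms(fps):.1f} ms/frame, {energy_per_frame_j(power_w, fps):.2f} J/frame")
```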

Gameplay can also suffer from bottlenecks.

A bottleneck occurs when one hardware or software component performs poorly compared with the other, more powerful components. That component runs at full capacity while the others, waiting on it, are underused, which limits the overall performance of the system.

By correctly balancing the hardware configuration and adjusting graphics settings accordingly, gamers can minimize the risk of slowdowns and jerks, delivering a more enjoyable and immersive gaming experience.
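A deliberately simplified model illustrates the bottleneck idea and the GPU utilization figures reported for Assassin’s Creed Valhalla: if the CPU prepares the next frame while the GPU renders the current one, the slower of the two sets the frame time and the faster one sits partly idle. The per-frame timings below are hypothetical, and real pipelines (driver queues, frame caps, V-Sync) are more complex.

```python
def bottleneck(cpu_ms, gpu_ms):
    """Pipelined two-stage model: frame time is set by the slower stage.

    Returns (fps, cpu_utilization, gpu_utilization, limiting_component).
    """
    frame_ms = max(cpu_ms, gpu_ms)
    limiter = "GPU" if gpu_ms >= cpu_ms else "CPU"
    return 1000.0 / frame_ms, cpu_ms / frame_ms, gpu_ms / frame_ms, limiter


# Hypothetical per-frame costs: heavier settings mostly add GPU work.
for label, cpu_ms, gpu_ms in (("high settings", 8.0, 20.0), ("low settings", 8.0, 5.0)):
    fps, cpu_util, gpu_util, limiter = bottleneck(cpu_ms, gpu_ms)
    print(f"{label}: {fps:.0f} FPS, CPU {cpu_util:.0%}, GPU {gpu_util:.0%}, limited by {limiter}")
```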

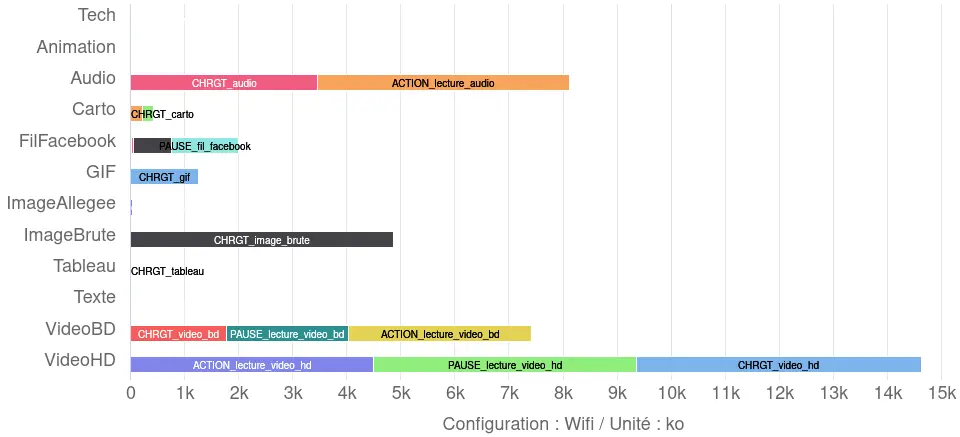

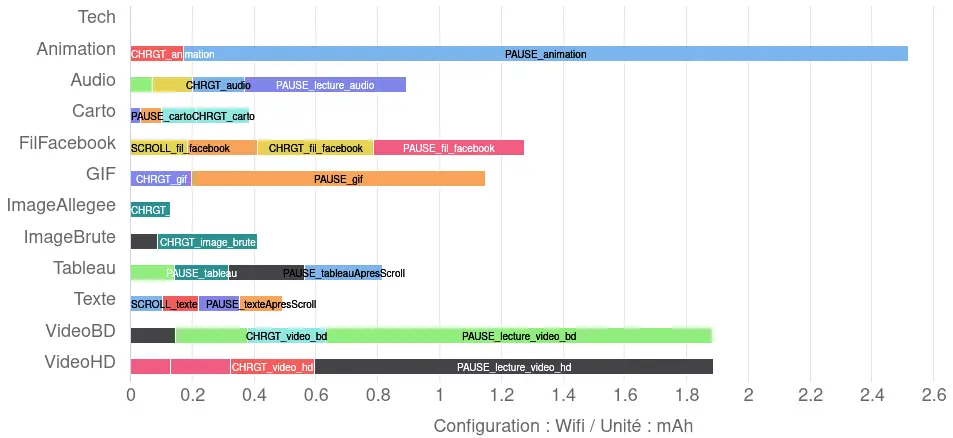

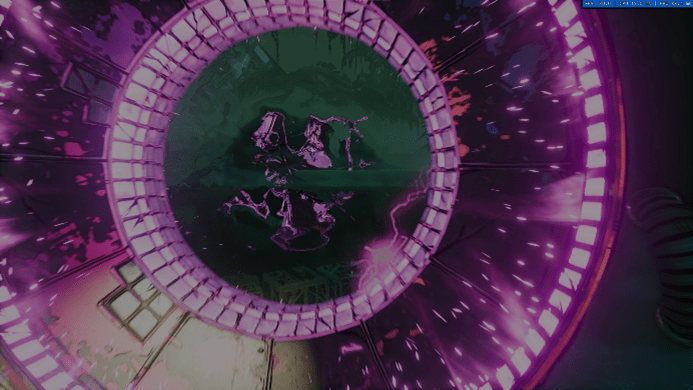

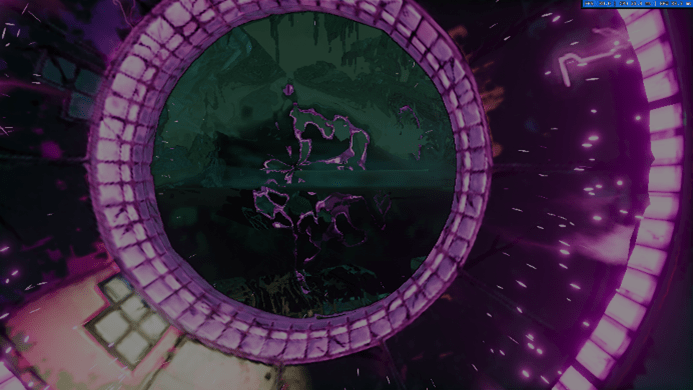

Here are some of the differences observed between benchmarks run at maximum and at minimum settings:

Implications for hardware and the environment

The constant evolution of video games towards ever more immersive and realistic experiences has significant implications for the hardware used. Game developers are seeking to fully exploit the graphics and processing capabilities of new technologies, which translates into higher hardware requirements.

In France, 2.3 million consoles, 27.5 million complete games (console + PC / physical + digital) and almost 7 million accessories (console + PC) were sold in 2020. With 10% growth, the console ecosystem accounted for 51% of the total video game market, while PC gaming grew by 9%. (Source: SELL)

Gamers want to stay at the cutting edge of technology to take full advantage of the latest releases. Beyond the financial cost this may represent, this quest for hardware performance is also a critical issue from an environmental point of view.

As we have seen, all the components in a configuration need to offer roughly the same level of performance for an optimized gaming experience. If a gamer has a high-performance graphics card but a lower-resolution screen, or a less powerful processor or motherboard, the gaming experience will not necessarily improve, and may even suffer. From an optimization point of view, then, it is less a matter of buying the latest components to improve performance than of adjusting game settings to your hardware configuration. This extends the components’ lifespan by reducing the stress placed on them, and it also improves the gaming experience.

Overloading components such as the graphics card or processor makes them run at high temperatures because of the volume of calculations they handle, which damages their transistors and chips and shortens their lifespan.

According to HP, the lifespan of an average desktop PC is between 2 and 3 years, and that of a gaming PC between 3 and 5 years.

We have no information about the environmental impact of manufacturing gaming PCs, but the frequent release of new hardware generations, which pushes some gamers to renew their configuration as often as every year, considerably increases the impact of this industry.

It’s worth noting that game consoles also have a significant carbon footprint.

Climate and sustainability researcher Ben Abraham analyzed the PlayStation 4’s central processing unit using mass spectrometry, revealing the presence of elements such as titanium, whose extraction, refining and manufacture contribute to greenhouse gas emissions.

This observation underlines the challenge of making the production of these devices sustainable, a goal that could take decades to reach.

The importance of measurement

Video game publishers play a crucial role in reducing the industry’s environmental footprint. To do so, it is imperative to take energy consumption into account throughout the game development process.

First and foremost, measuring energy consumption enables game publishers to understand the environmental impact of their products. This includes not only the direct energy consumption of the devices on which the games are run, but also the carbon footprint associated with game servers, updates and downloads.
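As a rough illustration of how such measurements can feed an impact estimate, the sketch below scales a per-hour consumption to a player base and converts it with a grid emission factor. The player count, play time and emission factor are placeholders, not data from this study; only the 0.254 kWh per hour figure comes from the measurements above, and the sketch covers device energy only, not servers, updates or downloads.

```python
def use_phase_kwh(players, hours_per_player, kwh_per_hour):
    """Device-side energy for a whole player base (PC + screen, no network or servers)."""
    return players * hours_per_player * kwh_per_hour


def emissions_kg_co2e(kwh, kg_co2e_per_kwh):
    """Convert energy to emissions with a grid emission factor."""
    return kwh * kg_co2e_per_kwh


# Placeholder inputs: replace with real telemetry and a country/year-specific factor.
PLAYERS = 100_000
HOURS_PER_PLAYER = 10
KWH_PER_HOUR_MAX_SETTINGS = 0.254  # average measured at maximum settings
GRID_FACTOR = 0.1  # kg CO2e per kWh, hypothetical

kwh = use_phase_kwh(PLAYERS, HOURS_PER_PLAYER, KWH_PER_HOUR_MAX_SETTINGS)
print(f"{kwh:,.0f} kWh -> {emissions_kg_co2e(kwh, GRID_FACTOR):,.0f} kg CO2e")
```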

Secondly, this awareness enables game developers to design game mechanics and graphics that optimize energy efficiency. For example, by minimizing complex visual effects that require high computing power, games can reduce their energy consumption while still offering an immersive gaming experience.

The subject of the environmental footprint of video games is increasingly being taken on board by publishers, which is encouraging. Initiatives such as Ukie’s Green Games Guide and Ecran d’après offer practical advice and best practices for reducing the environmental impact of game design and development. Similarly, tools such as Microsoft’s Xbox Sustainability Toolkit or Jyros, the environmental impact measurement tool dedicated to the video game industry in France, provide developers with concrete ways of assessing and improving the sustainability of their games.

However, it is important to generalize these practices and integrate them more systematically throughout the industry. Too often, the environmental aspect is relegated to the background, while the emphasis is placed on the performance and aesthetics of games. It is therefore essential that publishers take greater account of the environmental implications of their design and development decisions.

Study limitations

In the context of this study, it is important to recognize certain limitations that could affect the scope and representativeness of the results obtained:

- Manufacturer/developer partnerships: Some video games may have been developed in partnership with hardware manufacturers to optimize their performance on specific configurations. Such agreements could skew benchmark results in favor of certain brands or models of components. This may affect comparisons between games, but not comparisons between configurations within the same game.

- Benchmark scenario not necessarily representative of game modes: Benchmark scenarios used to evaluate video game performance may not reflect actual gameplay conditions. For example, a benchmark may focus on specific game sequences that do not necessarily represent general gameplay. As a result, the results obtained may not be fully representative of the overall gaming experience.

- No measurement of multiplayer or online play: This study focuses primarily on the performance of single-player games, and does not take into account aspects related to multiplayer or online play. Consequently, data exchanges between game servers and clients, as well as network performance, are not taken into account in the analysis. This could limit a complete understanding of hardware requirements for an optimal online gaming experience.

Conclusion

In conclusion, this study highlights the growing demands that video games place on computer hardware. With the constant evolution of graphics and functionality, modern games require increasingly powerful hardware configurations to deliver an optimal gaming experience. This raises important questions about the sustainability and energy efficiency of computer equipment, as well as about consumers’ hardware choices. Ultimately, publishers and gamers alike need to strike a balance between video game performance and the sustainability of the technology industry.