12 reasons not to eco-design a digital service

The ecodesign of digital services has been gaining momentum in recent years. However, this is not happening without a hitch. The aim of this article is to examine the obstacles to the adoption of ecodesign. It draws heavily on Perrine Croix’s article (12 excuses de l’inaction en accessibilité et comment y répondre [FR]), which is itself adapted from an article by Cambridge University (Discourses of climate delay [PDF]).

The excuses

1. It costs too much

That’s true, and it’s to be expected, at least while the team builds up skills and integrates the approach into its usual way of working. But ecodesign is also a way of reducing costs: deprioritizing features that are of little use, rarely used or hard to use, on the grounds of their environmental impacts; producing a digital service that’s easier to maintain; and reducing the infrastructure needed to run and store it. This is where DevGreenOps meets FinOps.

Last but not least, ecodesign is a quality issue, and quality reduces the cost of ownership: the service can be used on a wider range of devices, is less costly to maintain, and so on.

2. It takes too much time

Yes, implementing ecodesign takes time, but continuous improvement and automation can help (a lot). Similarly, prioritization based on environmental aspects can reduce the time needed to design the digital service by limiting it to what is strictly necessary for the user: removing functionalities from the scope, simplifying user paths, etc.
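
As an illustration of what such automation could look like, here is a minimal sketch of a page budget check that might run in continuous integration. Everything in it is an assumption made for the example: the `PageMetrics` and `Budget` structures, the thresholds, and the choice of request count and transferred bytes as indicators. It does not reflect any particular tool, and how the measurements are collected is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PageMetrics:
    """Measurements for one page (hypothetical structure for this sketch)."""
    url: str
    requests: int
    transferred_bytes: int


@dataclass(frozen=True)
class Budget:
    """Illustrative thresholds only; pick values that fit your own service."""
    max_requests: int = 40
    max_transferred_bytes: int = 1_000_000


def budget_violations(page: PageMetrics, budget: Budget) -> list[str]:
    """Return one human-readable message per threshold the page exceeds."""
    violations = []
    if page.requests > budget.max_requests:
        violations.append(
            f"{page.url}: {page.requests} requests (budget {budget.max_requests})"
        )
    if page.transferred_bytes > budget.max_transferred_bytes:
        violations.append(
            f"{page.url}: {page.transferred_bytes} bytes transferred "
            f"(budget {budget.max_transferred_bytes})"
        )
    return violations


if __name__ == "__main__":
    # Made-up measurements: too many requests, but within the byte budget.
    page = PageMetrics(url="/home", requests=55, transferred_bytes=800_000)
    for message in budget_violations(page, Budget()):
        print(message)
```

Failing the build when the list of violations is not empty is one way to keep regressions visible without asking anyone to check by hand.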

3. The team doesn’t have the necessary skills

It’s indeed possible that some employees don’t yet have all the ecodesign skills they need. However, as we often hear, much of what we need to know is based on common sense, or has already been covered for accessibility or performance. Online resources abound, whether in the form of articles, tools or sets of guidelines. What’s more, this upskilling often enhances the motivation of the people concerned, and may even be attractive to those wishing to join an organization more committed to this trajectory.

Of course, you also have the option of hiring experts in the field to help you and your employees improve their skills.

4. There’s no consensus on estimating the environmental impacts of a digital service.

That’s true: several environmental projection models coexist today. ADEME (the French Environment and Energy Management Agency) is currently tackling the subject from the angle of RCP (methodological guidelines on how to communicate about environmental impacts). However, the absence of consensus should not hold back action. Tools and guidelines do exist, even if they have known limitations. And in the broader context of climate change, action is urgently needed.

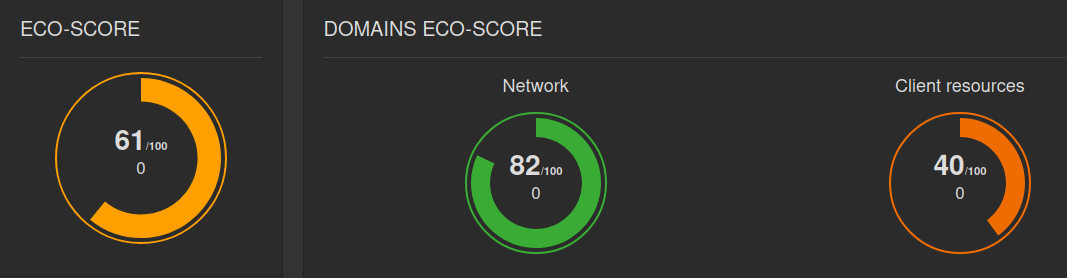

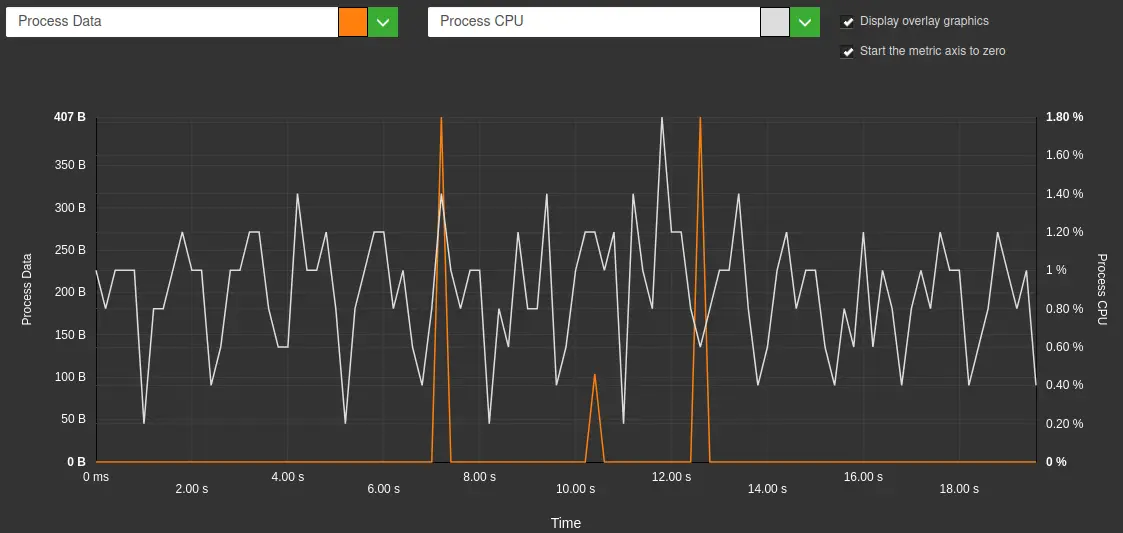

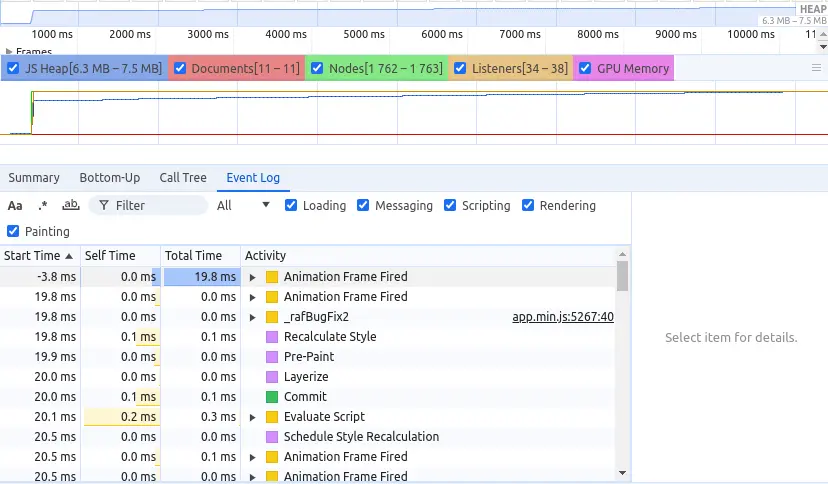

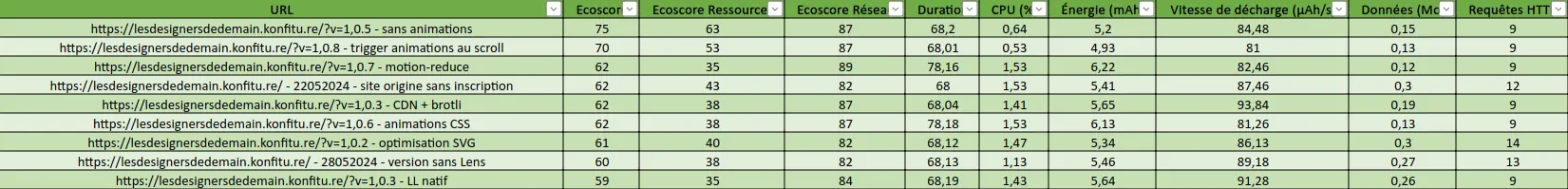

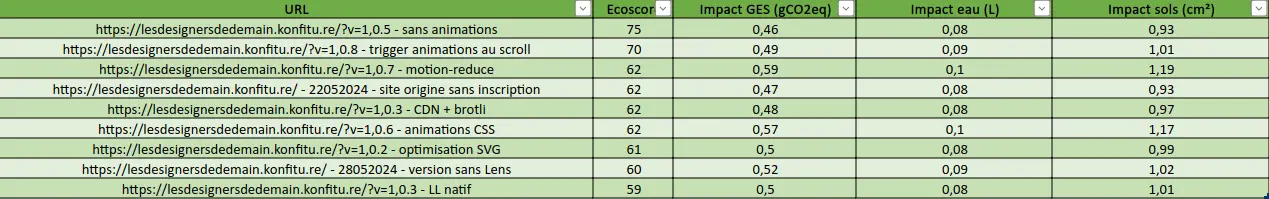

But beware: depending on the methodology and tools used, results can vary widely, and certain areas of over-consumption (and potential for optimization) may go undetected. See this article: https://greenspector.com/en/reduce-the-weight-of-a-web-page-which-elements-have-the-greatest-impact/

5. No need for ecodesign, we’re already considering performance

Ecodesign meets performance in many respects, especially when it comes to technical optimization. If you’re already heavily involved in the performance of digital services, chances are you’re helping to reduce their environmental impacts. However, there are also some areas of divergence between the two approaches. Performance is sometimes improved by delaying the loading or execution of some resources, or by anticipating their loading in case the user performs certain actions. This improvement in efficiency and perceived performance should not make us lose sight of the need for sobriety (doing less of the same thing: fewer images, for example) and frugality (giving up on something: a conversational bot based on artificial intelligence, for example).

It remains questionable to try to speed up a component that degrades accessibility, ecodesign or any other aspect linked to Responsible Digital.

Performance is one indicator of sustainability, but it shouldn’t be the only one.
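
The difference between efficiency and sobriety can be made concrete with a little arithmetic. The sketch below is purely illustrative: the image sizes, the number of images and the function names are made up for the example, and it only counts image bytes. It compares a page that lazy-loads ten images with a page that simply shows four.

```python
def initial_load_bytes(image_sizes: list[int], visible_count: int, lazy: bool) -> int:
    """Image bytes fetched before any scrolling.

    With lazy loading, only the first `visible_count` images are fetched;
    without it, every image on the page is fetched up front.
    """
    if lazy:
        return sum(image_sizes[:visible_count])
    return sum(image_sizes)


def full_scroll_bytes(image_sizes: list[int]) -> int:
    """Image bytes fetched once the user has scrolled through the whole page."""
    return sum(image_sizes)


if __name__ == "__main__":
    # Hypothetical page: ten images of 200 kB each, two visible on arrival.
    images = [200_000] * 10
    # Hypothetical sober variant of the same page: only four images are kept.
    sober_images = images[:4]

    print("eager, initial load:", initial_load_bytes(images, 2, lazy=False))
    print("lazy, initial load:", initial_load_bytes(images, 2, lazy=True))
    print("lazy, full scroll:", full_scroll_bytes(images))
    print("sober, full scroll:", full_scroll_bytes(sober_images))
```

Under these assumptions, lazy loading lowers what is fetched on arrival, but a user who scrolls to the end still downloads every image. Removing images lowers the total in every case.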

6. The best ecodesigned site is the one that doesn’t exist

That’s true, but it’s not necessarily the one that best meets the needs of your users. To make sure of this, the General Policy Framework [PDF] proposes, in its first criterion, to take into account the environmental impacts according to the need for the service (criterion 1.1 with, in particular, the Ethical Designers questionnaire [FR]). It is also important to ask yourself these questions and to take measurements before considering the redesign of a digital service, since a redesign could itself worsen the service’s environmental impact.

7. An ecodesigned site is bound to be ugly

This issue is also often raised for accessible websites. Both are first and foremost design constraints that can enhance creativity, and there are a growing number of examples of this. An article on this subject is available: https://greenspector.com/en/does-a-sober-site-have-to-be-ugly/

8. It’s a matter for developers

Developers are indeed concerned, but they are not the only ones. Their scope of action is often limited to technical efficiency or optimization, and constrained by decisions that do not depend on them: limiting the number of media or third-party services, choosing a hosting provider with less impact, deleting some functionalities, etc. The impact of code is far less than that of design choices. The developer’s skills remain essential to the success of the ecodesign approach, but the approach is much broader than that, both in terms of the digital service’s lifecycle and the roles involved.

In addition, the developer may have a duty to advise on overconsumptions linked to functionality, ergonomics, graphic choices or even published content.

9. The environmental impacts of digital technology are negligible compared to other sectors

Studies published in recent years tend to prove the contrary, for example: https://presse.ademe.fr/2023/03/impact-environnemental-du-numerique-en-2030-et-2050-lademe-et-larcep-publient-une-evaluation-prospective.html [FR]

The environmental impact of digital technology appears non-negligible, particularly because of user terminals, whose manufacture is critical in this respect. What’s more, given the urgency of the climate change situation, we need to act as holistically as possible, all the more so as the benefits of ecodesign for the user experience are increasingly well documented.

10. Greenwashing

There are two aspects to consider here. For some people, ecodesign of digital services is greenwashing, because the impact of this approach is negligible. To convince you otherwise, I refer you to the previous point on the environmental impacts of digital. As far as the results of this approach are concerned, you can find testimonials and feedback on the Greenspector website: https://greenspector.com/en/resources/success-stories/

The second aspect to take into account concerns structures that fear being accused of greenwashing if they communicate on the ecodesign of digital services. This may be because these structures are also responsible for much greater environmental impacts, for example in their core business. To avoid this, it’s essential to pay close attention to communication elements. This means drawing on existing, recognized benchmarks, and making the approach and tools used, as well as the action plan, as explicit as possible. The aforementioned General Policy Framework can be used to structure your approach and build your ecodesign declaration. Furthermore, the ecodesign of digital services must not replace other efforts to reduce the environmental impact of the structure. Lastly, you’ll need to rely on ADEME’s RCP (see above) when they become available, in order to comply with the suggested elements for appropriate communication on the subject.

11. My customers aren’t interested

Users are increasingly sensitive not only to environmental issues, but also to the way in which their digital services are designed. Ecodesign is also about improving the user experience, which is an essential but unexpressed need for most of them. For example, limiting phone battery drain is an important lever for ecodesign, all the more so as low battery is increasingly seen as a source of anxiety for users (https://www.counterpointresearch.com/insights/report-nomophobia-low-battery-anxiety-consumer-study/). Similarly, ecodesign can improve the user experience on a degraded connection or an old terminal, and can even help to improve accessibility. Conversely, beware of actions that are sometimes taken to improve the user experience without consulting users (and without taking into account the consequences for environmental impacts), such as adding auto-play videos or carousels to make a page more attractive. More generally, communicating on ecodesign also serves to highlight expertise and interest in product quality.

12. In the absence of legal constraints on ecodesign, we prefer to prioritize accessibility

Improving the accessibility of your digital services is essential, especially as it often helps to reduce their environmental impacts. The reinforcement of obligations linked to the accessibility of digital services at European and French level marks a turning point for many structures. In particular, the scope of application has been extended, and financial penalties have been increased.

To date, there is no similar system for the ecodesign of digital services. ARCEP (Autorité de Régulation des Communications Electroniques, des Postes et de la Distribution de la Presse) is responsible for the aforementioned General Policy Framework, but the subject of legal obligations has been pushed directly to European level via BEREC (Body of European Regulators for Electronic Communications). It is to be hoped, therefore, that the subject will progress rapidly so that legal obligations can emerge. Perhaps some countries will take the initiative. In any case, there’s no need to wait for this type of mechanism before embarking on an ecodesign approach. Its benefits are becoming better known, documented and even measured. The aim is not only to improve the user experience, but also to enhance the skills of teams, and even to make the company more attractive to recruits and potential customers. While ecodesign has been a differentiating factor for some years, its increasingly widespread adoption tends to make it an expectation, whose absence counts against an organization. As more and more organizations take up the subject, it’s undesirable to lag behind, whatever the reasons.

Conclusion

There may be many reasons not to engage in ecodesign, not least because the subject may seem intimidating or even non-priority. It’s important to bear in mind that ecodesign contributes to improving not only the user experience, but also other aspects of the digital service. Its integration into the project must be gradual, the essential point being to adopt a continuous improvement approach. Results won’t be perfect overnight. However, the first step can be simple and effective, and results and skills improve over time.

Laurent Devernay Satyagraha has been an expert consultant at Greenspector since 2021. He is also a trainer, speaker and contributor to the W3C’s Web Sustainability Guidelines, INR’s GR491, greenit.fr’s 115 best practices and various working groups, notably around the RGESN.