Disclaimer: This article was not written by an SEO expert, but by an eco-design expert inspired by a very interesting talk by Erlé Alberton, “How to hack Google with SEO data”.

Google’s SEO algorithms

Crawling, the bedrock of the algorithm

Google’s SEO algorithms are based on three domains:

- crawling, which allows Google to evaluate your pages in terms of response time and technical quality;

- indexing, which analyzes the content (freshness, richness, quality…);

- ranking, which analyzes your site’s popularity.

Crawling is one of the most important parts: it determines how Google is going to display your pages. The Google robots (also known as Googlebots) analyze each URL and index it. This is an iterative process: bots come back frequently to analyze the same pages again and identify potential changes.

What is Crawl budget?

The effort Google’s bots spend analyzing your website affects the number of pages that get indexed, the frequency of future visits, and your website’s overall rating. Google’s algorithm is indeed governed by a “maximum effort limit” called the crawl budget. Google defines it as follows:

Crawl rate limit

Googlebot is designed to be a good citizen of the web. Crawling is its main priority, while making sure it doesn’t degrade the experience of users visiting the site. We call this the “crawl rate limit” which limits the maximum fetching rate for a given site. Simply put, this represents the number of simultaneous parallel connections Googlebot may use to crawl the site, as well as the time it has to wait between the fetches. The crawl rate can go up and down based on a couple of factors:

Crawl health: if the site responds really quickly for a while, the limit goes up, meaning more connections can be used to crawl. If the site slows down or responds with server errors, the limit goes down and Googlebot crawls less.

…

In other words, Google doesn’t want to spend too much time on your website, so that it has time to review other sites. As a consequence, if it detects slow performance, the analysis will be less extensive. Not all pages will be indexed and Google will not come back as often. As a result, your ranking will decrease.

To explain this budget another way: crawling consumes server resources at Google, and this translates into an actual cost. Google is not a philanthropic institution, and it is understandable that a company wants to limit operational costs such as crawling. At the same time, it is also a way to limit the environmental impact of the operation, which is a big deal for Google.

Know where you stand

Now that we have seen why it is necessary to watch the crawl budget and the way Google analyzes your website, it is good to know that you can do so in different ways.

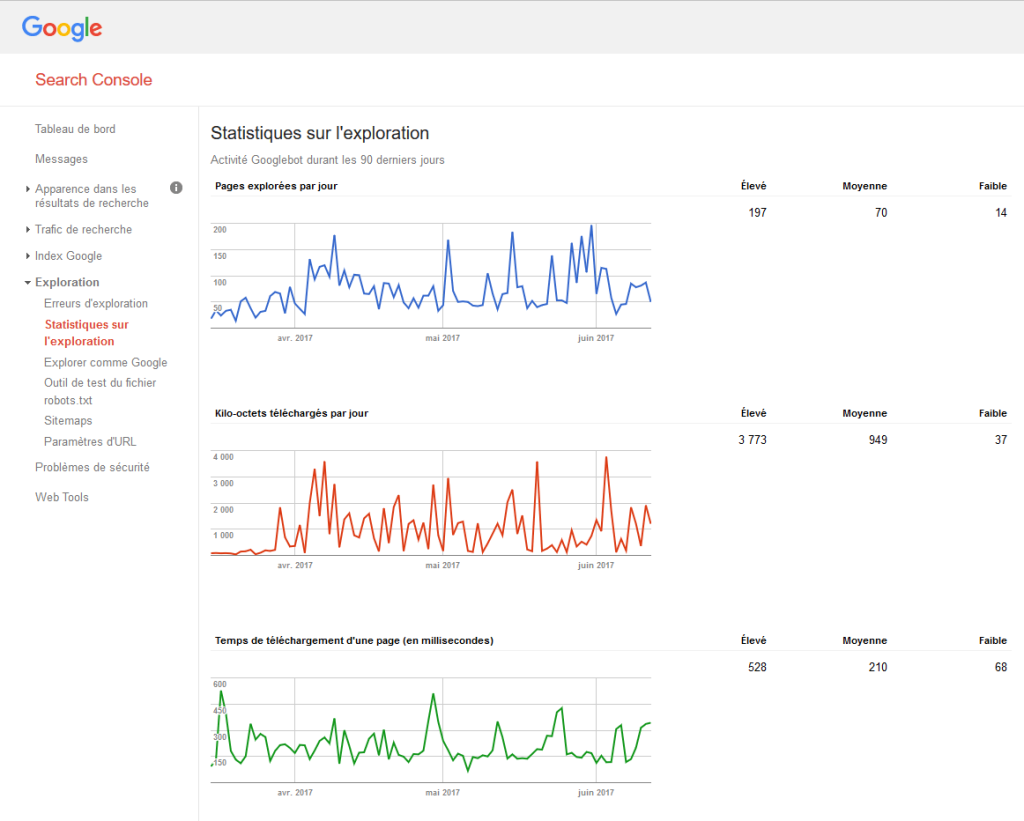

You can use the Crawl Stats report in the Google Search Console.

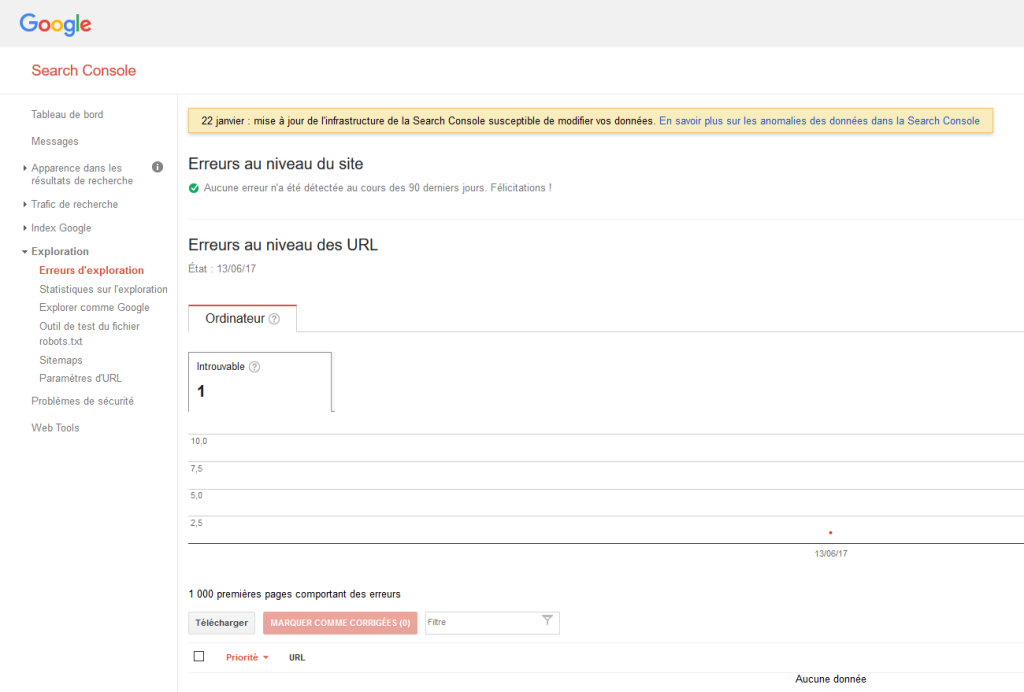

The Crawl Errors window is rather important: it shows you the errors the robot encounters during the crawl.

There are several types of errors, but if a page is too slow to load, an error will certainly appear (bot timeout). Remember that Google has better things to do and doesn’t want to spend too much time crawling your website. You will find more information on errors here.

If you’re feeling more technical, you can have a look at your servers’ logs to find out what the bots did.
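As a rough illustration of the log approach, here is a minimal Python sketch. It assumes your server writes access logs in the widespread “combined” format and that the bot identifies itself with “Googlebot” in its user agent (which can be spoofed). The function name and the sample lines are hypothetical, made up for the example.

```python
import re
from collections import Counter

# Assumed "combined" log format:
# ip ident user [time] "method path protocol" status size "referer" "user-agent"
LOG_PATTERN = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"$'
)


def summarize_googlebot_hits(lines):
    """Count Googlebot requests per HTTP status code and per path."""
    by_status = Counter()
    by_path = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line.strip())
        if match is None:
            continue  # skip lines that do not follow the assumed format
        if "Googlebot" not in match.group("agent"):
            continue  # keep only requests that claim to come from Googlebot
        by_status[match.group("status")] += 1
        by_path[match.group("path")] += 1
    return by_status, by_path


# Hypothetical sample lines, for illustration only.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE_LOG = [
    '66.249.66.1 - - [10/Oct/2017:13:55:36 +0200] "GET /shoes.php?color=red HTTP/1.1" 200 5120 "-" "' + GOOGLEBOT_UA + '"',
    '66.249.66.1 - - [10/Oct/2017:13:55:40 +0200] "GET /old-page HTTP/1.1" 404 312 "-" "' + GOOGLEBOT_UA + '"',
    '203.0.113.7 - - [10/Oct/2017:13:56:01 +0200] "GET / HTTP/1.1" 200 8000 "-" "Mozilla/5.0 (X11; Linux x86_64)"',
]

statuses, paths = summarize_googlebot_hits(SAMPLE_LOG)
print(dict(statuses))
print(dict(paths))
```

A high share of 4xx or 5xx codes in the first counter is a hint that part of the crawl budget is being spent on errors.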

Here is the list of robots taken into account in the Google Search Console’s crawl budget.

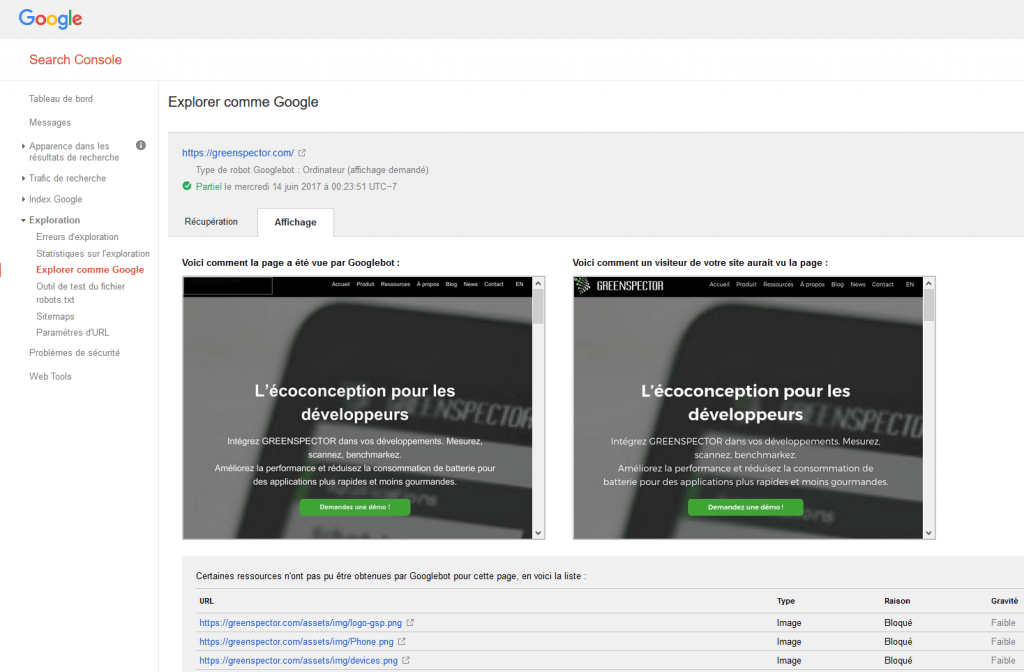

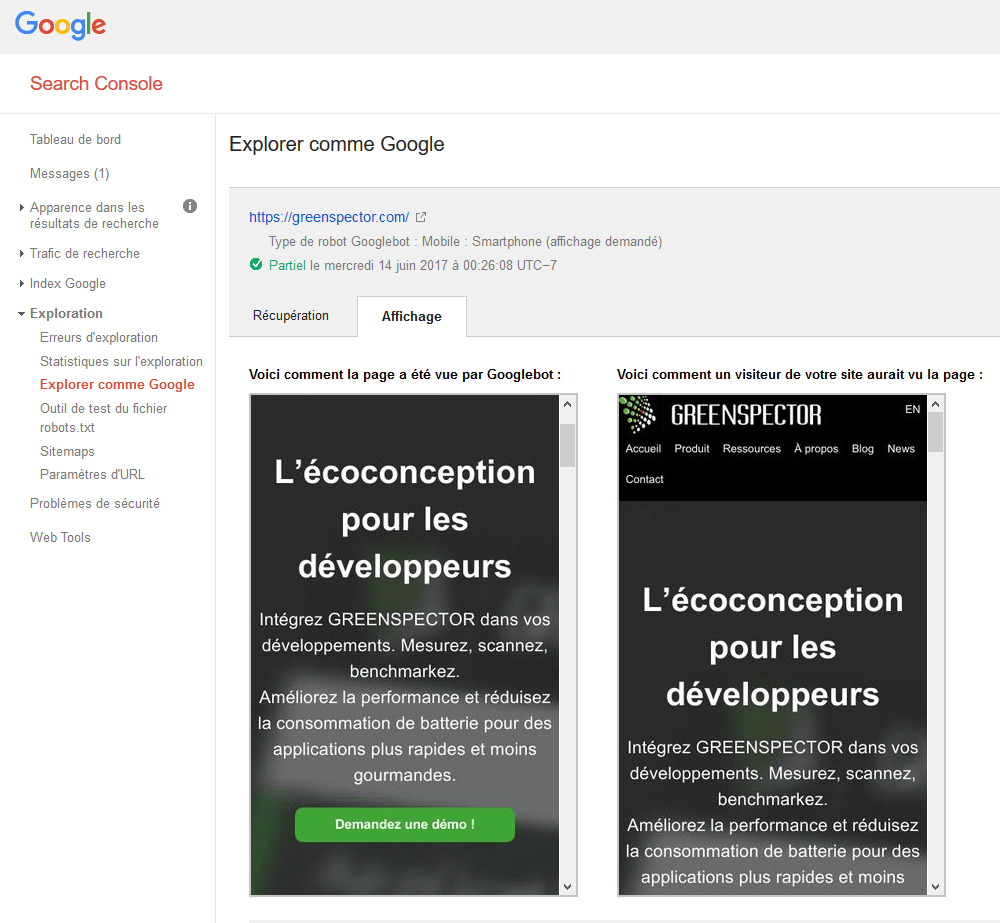

Finally, you can simulate the way Google is going to “see” your page. Head over to the Google Search Console and use the Fetch as Google option.

Now you know how Google crawls through your site and sees it. Whether the results are good or bad, SEO ranking is never set in stone and you need to work on it continuously. We will see how to improve this.

Improve the performance

Server response time

As you may have understood by now, Googlebot behaves like an actual user: if the page takes too long to load, it will give up and move on to another site. Good performance leads to more pages being crawled by Google: faster loading leaves the bot with time to crawl more pages. As it is more deeply indexed, your website will rank better.

Reduce excessive page loading for dynamic page requests.

A site that delivers the same content for multiple URLs is considered to deliver content dynamically (e.g. www.example.com/shoes.php?color=red&size=7 serves the same content as www.example.com/shoes.php?size=7&color=red). Dynamic pages can take too long to respond, resulting in timeout issues. Or, the server might return an overloaded status to ask Googlebot to crawl the site more slowly. In general, we recommend keeping parameters short and using them sparingly. If you’re confident about how parameters work for your site, you can tell Google how we should handle these parameters.

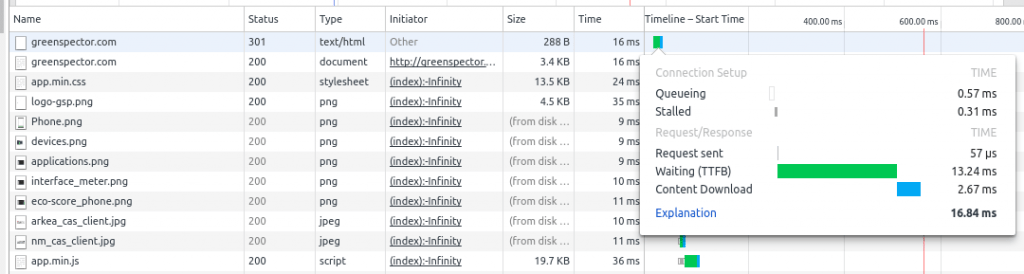

The first limit to respect: don’t exceed a certain server response time. A “certain amount”? It is pretty hard to set a threshold! However, you can at least measure performance with an indicator such as the Time To First Byte (TTFB). It represents the time between the client sending the request and receiving the first byte of the response. The TTFB takes into account network transfer time as well as server processing time. It is measured by the usual performance management tools; the easiest way is to use the developer tools embedded in browsers.

A basic threshold would be between 200 and 400 ms. Have a look at the time you get and try to decrease it. How do you do so, you ask? A few parameters have to be taken into account:

- Server configuration: if you are on shared hosting, it is hard to take action. But let me tell you, my friends: if you know the IT infrastructure manager, go and ask them!

- Request processing: for instance, if you are on a CMS like WordPress or Drupal, the time it takes to generate PHP pages and access the database has an important impact. You can use a cache plugin such as W3 Total Cache or a reverse proxy like Varnish, for example.

- Inefficient code on the server side: Analyze your code and apply good eco-design practices.

This way, your pages will be easier for users to access and, more importantly, Google will run into fewer crawl errors.
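If you prefer a script to the browser tools, the TTFB can be approximated in a few lines of Python. This is only a sketch under stated assumptions: the function names are hypothetical, the measurement includes connection setup, and the labels simply reuse the 200 to 400 ms range mentioned above rather than any official Google threshold.

```python
import http.client
import time


def measure_ttfb_ms(host, port=80, path="/", use_tls=False, timeout=10.0):
    """Approximate the Time To First Byte of one GET request, in milliseconds.

    The timer covers connection setup, sending the request, server
    processing, and the arrival of the first byte of the response body.
    """
    if use_tls:
        connection = http.client.HTTPSConnection(host, port, timeout=timeout)
    else:
        connection = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        start = time.perf_counter()
        connection.request("GET", path)
        response = connection.getresponse()  # returns once the headers are read
        response.read(1)  # read the first byte of the body, if any
        return (time.perf_counter() - start) * 1000.0
    finally:
        connection.close()


def classify_ttfb(ttfb_ms):
    """Label a TTFB against the 200-400 ms range (labels are an assumption)."""
    if ttfb_ms <= 200:
        return "good"
    if ttfb_ms <= 400:
        return "acceptable"
    return "slow"
```

A call such as `classify_ttfb(measure_ttfb_ms("www.example.com", 443, "/", use_tls=True))` gives a first indication. Since a single request is noisy, it is worth repeating the measurement and looking at the median.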

Display performance

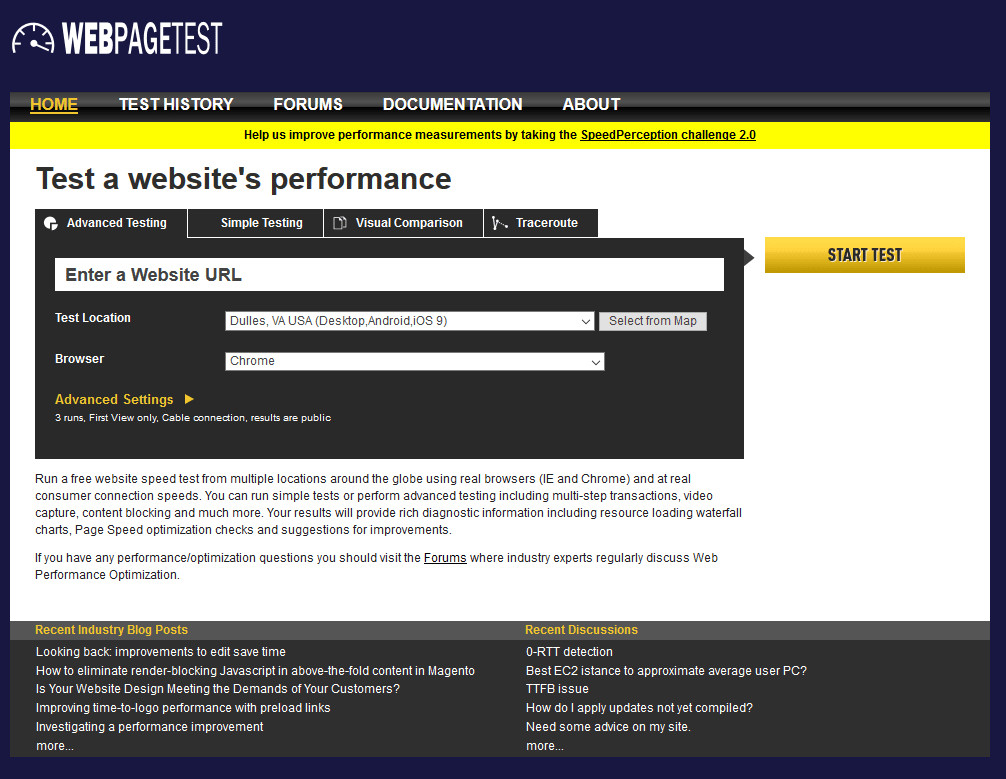

It is hard to tell how much Google’s bots take your page’s display speed into account, but it remains an important characteristic. If the page loads in 10 seconds, bots will struggle to read the entire thing. How do you measure that? Just like for the TTFB, the browser’s developer tools come in handy. I also recommend using WebPageTest.

The idea here is to make the page visible and usable by the user as quickly as possible. As quickly as possible, I said? The RAIL model provides target times. The letter R (for Response), in particular, indicates a time under 1 second.

To improve this, the web performance field offers plenty of good practices. Here are a few examples:

- Use the client cache for static elements such as CSS. On a second visit, this tells the browser (and also the bots) that the element has not changed and therefore does not need to be reloaded.

- Concatenate JS and CSS files, which reduces the number of requests. BUT this rule no longer holds if you use HTTP/2.

- Try to limit the number of requests. We see numerous websites with over 100 requests, which is costly for the browser in terms of loading.
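To make the client cache practice more concrete, here is a small Python sketch of the usual validation mechanism: the server tags a static file with an `ETag`, and answers `304 Not Modified` with an empty body when the client sends the same tag back in `If-None-Match`. The function names and the one-day `max-age` are assumptions for the example, and header lookup is simplified (real HTTP header names are case-insensitive).

```python
import hashlib


def make_etag(body: bytes) -> str:
    """Build a validator from the content itself (a truncated SHA-256 hash)."""
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def serve_static(body: bytes, request_headers: dict, max_age: int = 86400):
    """Return (status, headers, payload) for a static resource.

    If the client already holds the current version, nothing is re-sent.
    """
    etag = make_etag(body)
    headers = {"ETag": etag, "Cache-Control": f"public, max-age={max_age}"}
    if request_headers.get("If-None-Match") == etag:
        return 304, headers, b""  # unchanged: empty body, minimal transfer
    return 200, headers, body


css = b"body { margin: 0; }"
first_status, first_headers, _ = serve_static(css, {})
second_status, _, second_payload = serve_static(
    css, {"If-None-Match": first_headers["ETag"]}
)
print(first_status, second_status, len(second_payload))
```

In practice this is handled by the web server or CDN configuration rather than by application code; the sketch only shows the logic.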

We’ll come back to web performance in a future article. In the end, if you make your page more efficient, it will benefit both your ranking AND your readers!

Take things further with eco-design

Pure performance limit

Focusing on performance only would be a mistake. Bots aren’t your normal users; they are machines, not humans. Web performance mainly focuses on user experience (fastest display). However, bots see further than humans: they read all the details of every element (CSS, JS…). For instance, if you focus on display performance, you might apply the rule that advises deferring some JavaScript code until the end of loading. This way, the page displays quite fast and processing can keep going. But as bots aim to crawl through all the elements of a page, the fact that scripts are deferred won’t change a thing for them and their analysis.

Optimizing all elements is necessary, including the ones loaded after the page is displayed. And we will pay extra attention to the use of JavaScript.

Googlebot can parse it, but only with extra effort, so each technology has to be used sparingly. For example, AJAX’s goal is to create dynamic interactions with the page: YES to forms or widget loading… but NO if it’s only for loading content on an OnClick.

Making Googlebot’s work easier pretty much means working on all aspects of the site. The fewer elements, the easier it is. So: Keep It Simple! …
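To get a feel for what a bot has to fetch, deferred scripts included, you can count the external resources a page references. The following Python sketch uses the standard library HTML parser; the class name, the categories, and the sample page are hypothetical, and it deliberately ignores resources loaded from CSS or by scripts at runtime.

```python
from collections import Counter
from html.parser import HTMLParser


class ResourceCounter(HTMLParser):
    """Count external scripts, stylesheets and images referenced by a page."""

    def __init__(self):
        super().__init__()
        self.counts = Counter()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "script" and attributes.get("src"):
            # Deferred or async scripts display later, but are still fetched.
            deferred = "defer" in attributes or "async" in attributes
            self.counts["deferred script" if deferred else "script"] += 1
        elif tag == "link":
            rel_values = (attributes.get("rel") or "").lower().split()
            if "stylesheet" in rel_values:
                self.counts["stylesheet"] += 1
        elif tag == "img" and attributes.get("src"):
            self.counts["image"] += 1


def count_resources(html_text):
    parser = ResourceCounter()
    parser.feed(html_text)
    parser.close()
    return parser.counts


# Hypothetical page, for illustration only.
SAMPLE_PAGE = """
<html><head>
<link rel="stylesheet" href="main.css">
<script src="app.js"></script>
<script src="analytics.js" defer></script>
</head><body>
<img src="hero.jpg" alt="">
<script src="slider.js" async></script>
</body></html>
"""

counts = count_resources(SAMPLE_PAGE)
print(dict(counts), "total:", sum(counts.values()))
```

Here the two deferred scripts help the display time for a human, yet they still count toward what a bot has to download and process.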

Eco-design coming to crawl’s rescue

Software eco-design mainly aims at reducing the environmental impact of software. Technically, it translates into the following: you have to meet the user’s need while respecting performance constraints and limiting resource and energy consumption as much as possible. Software-induced power consumption (including that of your website) is the cornerstone of the process.

As you have understood by now, bots have a budget based on limited time, but Google also has an energy bill to keep down in its datacenters. Huh, funny! This is where we meet the goals of software eco-design: limiting this consumption. In that case, a performance-centered approach doesn’t fit: by trying to improve performance without keeping the induced resource consumption under control, you risk getting the opposite of what you wanted.

So, what are the good practices, you may ask? Check out this blog and you’ll find plenty 🙂 But overall, I think that whatever the best practices, what matters most is resource consumption. Are Progressive Web Apps (PWA) good for crawling? Is lazy loading good too? It doesn’t really matter. In the end, if your website consumes few resources and is easy for the bot to “display”, then it’s all good.

Energy measurement to control crawlability

A single metric encompasses all resource consumption: energy. As a matter of fact, CPU load, network requests, graphics, etc. all result in a power drain for the machine. By measuring and controlling power consumption, you’ll be able to control the overall cost of your website.

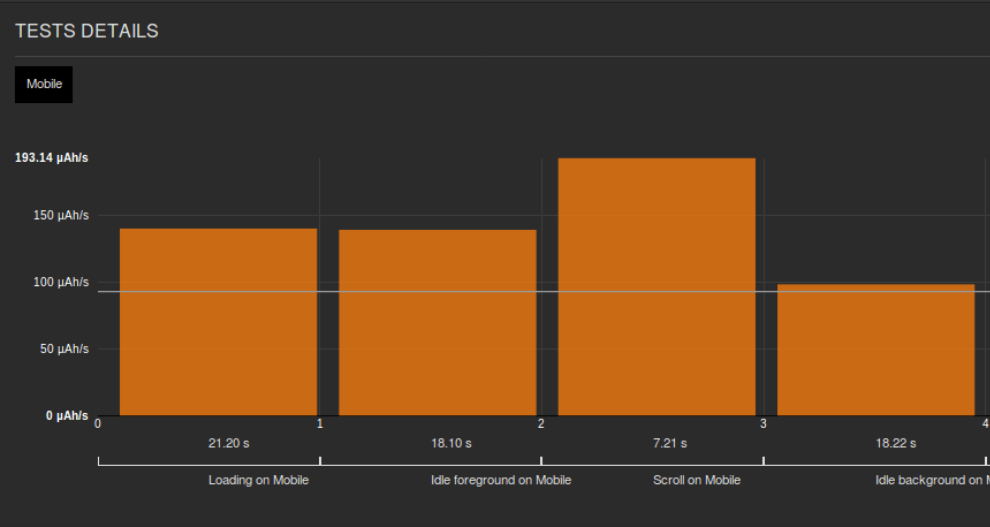

Here is an example of the power consumption of the website Nantes transition énergétique. There are 3 steps: website loading, idle in the foreground, and idle with the browser in the background. Numerous eco-design best practices have been applied. In spite of that, a slider is running and consumption in idle mode remains high, amounting to 80 mWh.

As we can see, power consumption is the final arbiter of resource consumption. Decreasing power consumption will also reduce Googlebot’s effort. The ideal energy budget for a page load is 15 mWh: this is the value required for the “Gold” level of the Green Code Label. As a reminder, even though best practices were applied, consumption still reached 80 mWh. After further analysis and discussions with the developers and the project manager, the slider was replaced by a random display of static images: consumption dropped below 15 mWh!
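For readers who want to relate power readings to such a budget, here is a minimal Python sketch of the arithmetic: energy is power integrated over time, and 1 mWh equals 3.6 joules. The function names and the sample readings are hypothetical; only the 15 mWh figure comes from the text above, and this is not how any particular measurement tool works.

```python
GOLD_BUDGET_MWH = 15.0  # page-load budget quoted above


def energy_mwh(samples):
    """Integrate (time_in_seconds, power_in_watts) samples into mWh.

    Uses the trapezoidal rule between consecutive samples.
    """
    joules = 0.0
    for (t0, p0), (t1, p1) in zip(samples, samples[1:]):
        joules += (p0 + p1) / 2.0 * (t1 - t0)
    return joules / 3.6  # 1 Wh = 3600 J, so 1 mWh = 3.6 J


def within_budget(samples, budget_mwh=GOLD_BUDGET_MWH):
    return energy_mwh(samples) <= budget_mwh


# Hypothetical readings: a steady 2 W and a steady 1.5 W over 30 seconds.
heavy_page = [(0, 2.0), (30, 2.0)]
light_page = [(0, 1.5), (30, 1.5)]
print(round(energy_mwh(heavy_page), 2), within_budget(heavy_page))
print(round(energy_mwh(light_page), 2), within_budget(light_page))
```

The point of the exercise is that both duration and power matter: a page that draws little power but keeps the device busy for a long time can still blow the budget.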

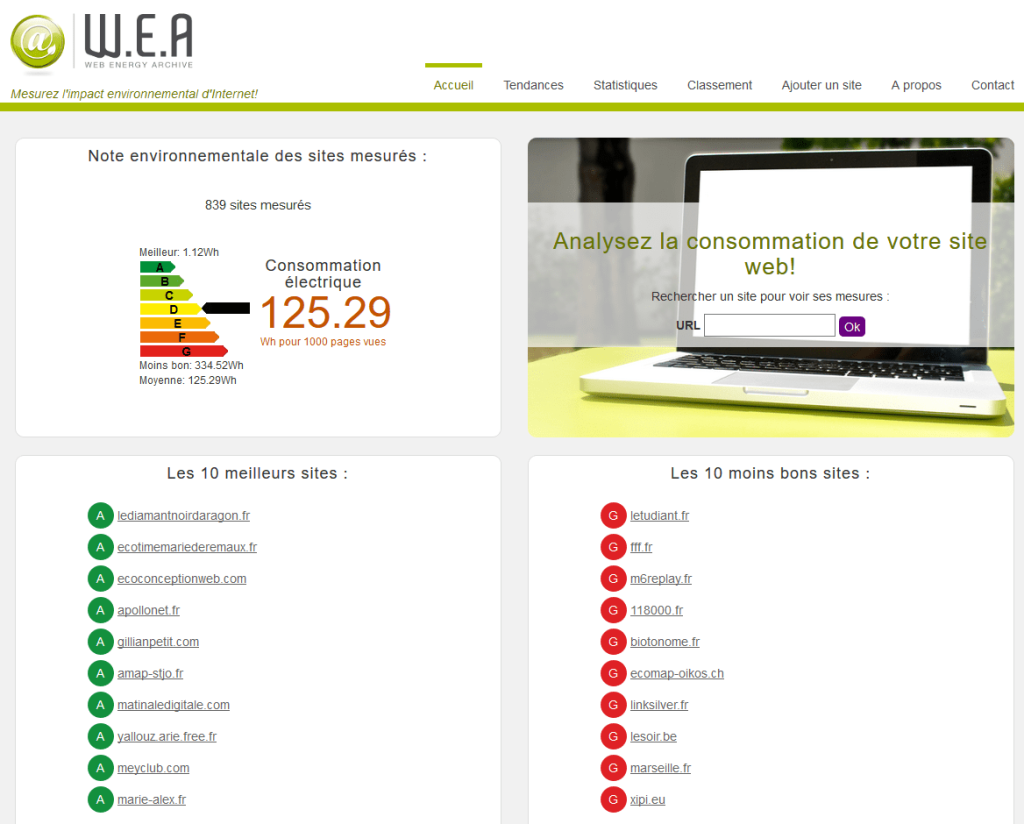

In the meantime, while you wait to get your label 🙂 you can measure your website’s consumption for free on Web Energy Archive, a non-profit project we proudly took part in.

Functionalities eco-design

Improving your page’s consumption and efficiency is good; however, it might not be enough. The next step in the process is to optimize the user path. The bot is a user with a limited resource budget that needs to go through as many of your website’s URLs as possible within that budget. If your website is too complex (too many URLs, too deep…), the machine won’t read the whole site, or at least not in a single pass.

It is necessary to make the user path simpler. And again, we are back to applying eco-design principles. You have to ask yourself: which functionalities does a user really need? Because if you integrate useless functionalities, both the user and the bot will waste resources at the expense of the elements that actually matter. Numerous analytics tools, animations all over… ask yourself whether those functionalities are really worth it, because the bot is going to analyze them. And this will probably be done at the expense of your actual content, which is what really matters.

For the user, it works the same way: if getting a piece of information or a service requires too many clicks, they will be discouraged. An efficient path architecture will help the bot as well as the user. Have a look at the sitemap to analyze the site.
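One simple way to quantify “too deep” is the click depth of each page, that is, the minimum number of links to follow from the home page. The Python sketch below computes it with a breadth-first search over a link graph; the graph itself is hypothetical and would in practice come from a crawl of your own site.

```python
from collections import deque


def click_depths(links, start):
    """Return the minimum number of clicks from `start` to each reachable page.

    `links` maps a page to the list of pages it links to.
    """
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site structure, for illustration only.
SITE_LINKS = {
    "/": ["/products", "/about"],
    "/products": ["/products/shoes", "/"],
    "/products/shoes": ["/products/shoes/red"],
    "/products/shoes/red": ["/products/shoes/red/size-7"],
    "/orphan": ["/"],
}

depths = click_depths(SITE_LINKS, "/")
for page, depth in sorted(depths.items(), key=lambda item: item[1]):
    print(depth, page)
```

Pages with a high depth, or pages such as `/orphan` that never appear in the result because nothing links to them, are good candidates for a simpler architecture.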

Finally, applying eco-design to pages won’t be effective if your site is complex. For instance, with numerous dynamic URLs, Google may detect a large number of URLs. As a consequence, you will get duplicate URLs, useless content… A little cleaning of the site’s architecture might be necessary.
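To spot such duplicates in a list of crawled URLs, one option is to normalize each URL and group the ones that collapse to the same form. The Python sketch below only sorts query parameters and lowercases the host, which is enough for the `shoes.php` case quoted earlier. The function names are hypothetical, the `https://` scheme is added for the example, and real sites may need extra rules (tracking parameters, trailing slashes, and so on).

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def canonical_url(url):
    """Normalize a URL: lowercase host, sorted query parameters, no fragment."""
    parts = urlsplit(url)
    query = urlencode(sorted(parse_qsl(parts.query, keep_blank_values=True)))
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, query, ""))


def group_duplicates(urls):
    """Return only the canonical forms that are reached by several URLs."""
    groups = defaultdict(list)
    for url in urls:
        groups[canonical_url(url)].append(url)
    return {key: found for key, found in groups.items() if len(found) > 1}


CRAWLED = [
    "https://www.example.com/shoes.php?color=red&size=7",
    "https://www.example.com/shoes.php?size=7&color=red",
    "https://www.example.com/about",
]

for canonical, variants in group_duplicates(CRAWLED).items():
    print(canonical, "<-", variants)
```

Each group printed this way is one page that a bot may crawl several times under different addresses.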

Anticipating Google’s Mobile First algorithms

Taking into account new platforms

Google wants to improve users’ search experience and takes their latest habits into account. With mobile users now outnumbering desktop users, Google’s algorithms are focusing on Mobile First. The schedule of changes isn’t very clear at the moment, but there are signs.

You can anticipate the way Google crawls your site, again via the Search Console, with the Fetch as Mobile option.

Google also offers a testing site in order to know whether or not your website is mobile-responsive.

Taking these #MobileFirst constraints into account isn’t necessarily an easy task. It might take some time and effort, but this is the price to pay if you want good ranking in the next few months. Plus, your users will be grateful for a website that no longer drains their smartphone battery!

Taking it further

Let’s quote Google:

Googlebot is designed to be a good citizen of the web.

As a developer or site owner, your ambition should be to become a web citizen at least as good as Googlebot. To do that, imagine using your website on a mobile device in difficult conditions, such as poor network coverage or an older device. By decreasing your site’s resource consumption, not only will you please Googlebot (which will reward you with a better ranking), but you will also give the whole world (including “richer” countries) easy access to your website.
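To put rough numbers on “poor service”, here is a back-of-the-envelope Python sketch. The model is a deliberate simplification and an assumption of this example: transfer time is page weight divided by bandwidth, plus one round trip per batch of parallel requests. The page weights, bandwidth, and latency are hypothetical values, not measurements.

```python
import math


def estimated_load_seconds(page_bytes, request_count, bandwidth_bps,
                           round_trip_s, parallel_connections=6):
    """Very rough load-time estimate on a constrained connection."""
    transfer = page_bytes * 8 / bandwidth_bps  # time to move the bytes
    rounds = math.ceil(request_count / parallel_connections)
    return transfer + rounds * round_trip_s  # plus latency per request batch


# Hypothetical slow mobile link: 400 kbit/s, 400 ms round trip.
SLOW_LINK = {"bandwidth_bps": 400_000, "round_trip_s": 0.4}

heavy = estimated_load_seconds(2_000_000, 100, **SLOW_LINK)  # 2 MB, 100 requests
light = estimated_load_seconds(300_000, 20, **SLOW_LINK)     # 300 KB, 20 requests
print(f"heavy page: about {heavy:.1f} s, light page: about {light:.1f} s")
```

Even with such a crude model, the gap between a heavy page and a light one on a weak connection is a matter of tens of seconds, which is the whole argument for lighter sites.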

Don’t think of this approach as charity. Remember that Facebook and Twitter already do this with the “Lite” versions of their respective apps. Example: Facebook Lite.

A website that is both mobile-responsive and takes into account all mobile platforms and all connection speeds should be valued by Google some time soon. Plus, the lighter the website, the fewer resources Google has to consume.

Just as for users, consumption and, above all, environmental impact are becoming more and more important for Google:

- Mandatory reports on sustainable development

- Pressure from NGOs to reduce its impact, such as the Greenpeace report

One thing is for sure: a website that is efficient and focused on users’ needs will be valued more highly in Google’s ranking (and in that of other search engines).

Conclusion

Your website’s ranking on Google is partly based on crawling: the robot going through your pages. To do that, it has a set time and resource budget. If your pages are too heavy or too slow, crawling won’t be complete and your site will suffer from poor ranking.

To avoid that, evaluate your site’s “crawlability” frequently. Then set up a continuous improvement process. To start, have a look at what you can get from the usual performance tools.

Then, think in terms of Mobile First: apply eco-design principles. Simplify the user path, and reduce or eliminate useless functionalities. Measure your power consumption and control it. After some effort, you will get a website that ranks better, is more efficient, and is better liked by its users.

Today, too many websites are on the dark side of overconsumption (too many requests, useless scripts…). It is urgent to anticipate the evolution of Google’s ranking algorithms, which now share users’ objectives by asking for more performance AND less power consumption: in short, efficiency.