The software world is in bad shape, and if we do not act, we may regret it. Environment, quality, exclusion … Software eats the world? Yes, a little too much.

The software world is in bad shape. Well, on the surface, everything is fine. How could a field that promises so much, economically and for the well-being of humanity, go wrong? Asking the question would mean challenging all of that. So everything is fine. We are moving forward, and we do not ask ourselves too many questions.

The software world is getting worse. Why? Twenty years of experience in the software world as a developer, researcher or CTO have given me the chance to rub shoulders with different fields, and have left me with the feeling that the problem is growing year by year. I have spent the last 6 years in particular trying to promote practices and software quality tools, and to educate developers about the impact of software on the environment. You have to be seriously motivated to think about improving the software world. Good practices do not spread as easily as the latest JavaScript framework. The software world is not permeable to improvements. Or at least only to superficial ones, not to deep ones.

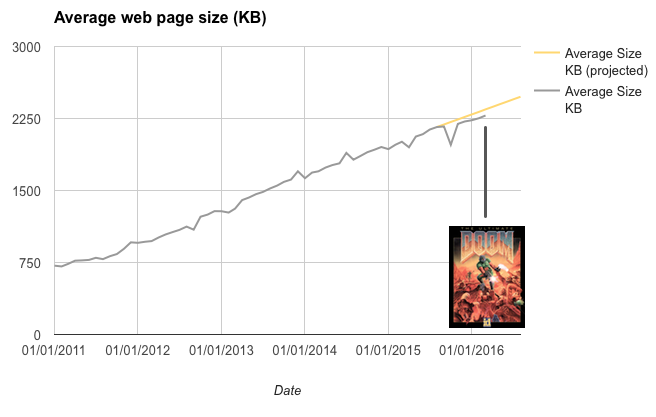

The software world is getting worse. Everything is slow, and it is not heading in the right direction. Some voices are rising. I invite you to read “Software disenchantment”. Everything is unbearably slow, everything is BIG and bloated, everything ends up becoming obsolete … The size of websites is exploding. A web page is now as big as the game Doom. The phenomenon affects not only the Web but also the IoT, mobile … Did you know? Software can use 13% of the CPU when idle, just to render a blinking cursor …

This is not the message of an old developer worn out by constant change and nostalgic for the good old days of the floppy disk … It is rather a call to deeply question the way we see and develop software. We are all responsible for this “non-efficiency” (developers, project managers, salespeople …). To say that everything is fine would not be reasonable, but to say that everything is going wrong without proposing any improvement would be even less so.

Disclaimer: while reading this article you will probably jump, cry FUD, call it trolling, disagree … That’s fine, but please read it all the way through!

We’re getting fat (too much)

Everything grows: the size of applications, the amount of data stored, the size of web pages, the memory of smartphones … Phones now have 2 GB of memory, and sending a 10 MB photo by email is now common … This might not be an issue if all software were used, effective and efficient … But this is not the case; I will let you browse the article “Software disenchantment” for more detail. It is difficult to say how many people share this feeling of heaviness and slowness. And at the same time, everyone has got used to it. That’s just computing. It is like bugs: “Your salary has not been paid? Arghhh … it must be a computer bug”. IT is slow, and we cannot help it. If we could do anything about it, we would already have solved the problem.

So everyone gets used to slowness. Everything is Uniformly Slow Code. We put up with it and everything is fine. Being efficient today means reaching a user experience that matches this uniform slowness. We only get rid of the things that might be too visible. A page that takes more than 20 seconds to load is too slow. On the other hand, 3 seconds is good. 3 seconds? With the multicore processors of our phones and PCs, and data centers all over the world, all connected by great communication technologies (4G, fiber …), isn’t that a bit weird? Compared with the profusion of resources deployed for that result, 3 seconds is huge. Especially since bits move through our processors on a time scale of nanoseconds. So yes, everything is uniformly slow. And that suits everyone (at least in appearance). Web performance (follow the hashtag #perfmatters) is necessary, but unfortunately it is an area that does not go far enough. Or maybe thinking in this area cannot go further because the software world is not permeable or sensitive enough to these topics.
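
To get a feel for the orders of magnitude mentioned above, here is a rough back-of-the-envelope sketch. The clock speed and the number of cores are assumptions chosen for illustration, not measurements of any particular device, and `cycles_available` is a hypothetical helper.

```python
# Rough budget of processor cycles available during a page load.
# ASSUMPTIONS (illustrative only): a 2 GHz clock and 4 cores.
CLOCK_HZ = 2_000_000_000
CORES = 4


def cycles_available(seconds: float, clock_hz: int = CLOCK_HZ, cores: int = CORES) -> int:
    """Upper bound on the clock cycles the assumed processor can spend in `seconds`."""
    return int(seconds * clock_hz * cores)


if __name__ == "__main__":
    for label, seconds in (("'good' page load", 3), ("'too slow' page load", 20)):
        print(f"{label}: {seconds} s = {cycles_available(seconds):,} cycles")
```

Under these assumptions, a “good” 3-second page load leaves room for 24 billion clock cycles on the user’s device alone, before counting the servers and the network.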

There are now even practices that consist not in solving the problem but in working around it, and this is a field in its own right: working on “perceived performance”, or how to use the user’s perception of time to put mechanisms in place so that we do not need to optimize. The field is fascinating from a scientific and human point of view. From the point of view of performance and software efficiency, a little less so. “Let’s find plenty of mechanisms so that we do not have to optimize too much!”

All of this would be acceptable in a world with modest demands on the performance of our applications. The problem is that, in order to absorb this lack of performance, we scale. Vertically, by adding ultra-powerful processors and more memory; horizontally, by adding servers. Thank you, virtualization, for allowing us to accelerate this arms race! Except that under the bits there is metal, and metal is expensive, and it pollutes.

Yes, it pollutes: it takes a lot of water to build electronic chips, chemicals to extract rare earths, not to mention the round trips around the world … Yes, uniform slowness does have a cost. But we will come back to it later.

We need to get back to more efficiency, to challenge hardware requirements, to redefine what performance is. As long as we are satisfied with this uniform slowness, and with new solutions whose only merit is not to slow things down further (such as adding hardware), we will not move forward. Technical debt, a notion largely assimilated by development teams, is unfortunately not suited to this problem (we will come back to this). What we have here is a debt of material resources and a mismatch between the user’s need and the technical solution. We are talking here about efficiency and not just about performance. Efficiency is a matter of measuring waste. ISO defines efficiency through three characteristics: time behaviour, resource utilization and capacity. Why not push these concepts further?
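
As an illustration of “measuring waste”, the sketch below measures two of the characteristics named above, time behaviour and resource utilization (here, peak memory), for two ways of computing the same result. It is only a minimal sketch using Python’s standard `time` and `tracemalloc` modules; the `measure` helper and the two example functions are hypothetical and written for this illustration.

```python
import time
import tracemalloc


def measure(func, *args):
    """Return (result, elapsed_seconds, peak_bytes) for a single call of func."""
    tracemalloc.start()
    start = time.perf_counter()
    try:
        result = func(*args)
        elapsed = time.perf_counter() - start
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, elapsed, peak


def sum_squares_list(n):
    """Builds the whole list in memory before summing it."""
    return sum([i * i for i in range(n)])


def sum_squares_stream(n):
    """Streams the values one at a time through a generator."""
    return sum(i * i for i in range(n))


if __name__ == "__main__":
    for func in (sum_squares_list, sum_squares_stream):
        result, elapsed, peak = measure(func, 100_000)
        print(f"{func.__name__}: result={result} time={elapsed:.4f}s peak={peak} bytes")
```

Both functions return the same value, but the first one holds every intermediate value in memory at once. That difference is invisible to the user and only shows up when someone decides to measure it.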

We are (too) virtual

One of the problems is that software is considered “virtual”. And that is the problem: “virtual” describes what has no effect (“that which exists only potentially, in a state of mere possibility, as opposed to what actually exists”, according to Larousse). Maybe it comes from the early 80s, when the term virtual was used to talk about the digital world (as opposed to the world of hardware). “Numeric” relates to the use of numbers (the famous 0 and 1). But in the end, “numeric” is not enough and it still includes a little too much hardware. Let’s use the term “digital”! Digital versus numeric is a debate in France that may seem silly but matters for the issue we are discussing. Indeed, “digital” hides the hardware part even more.

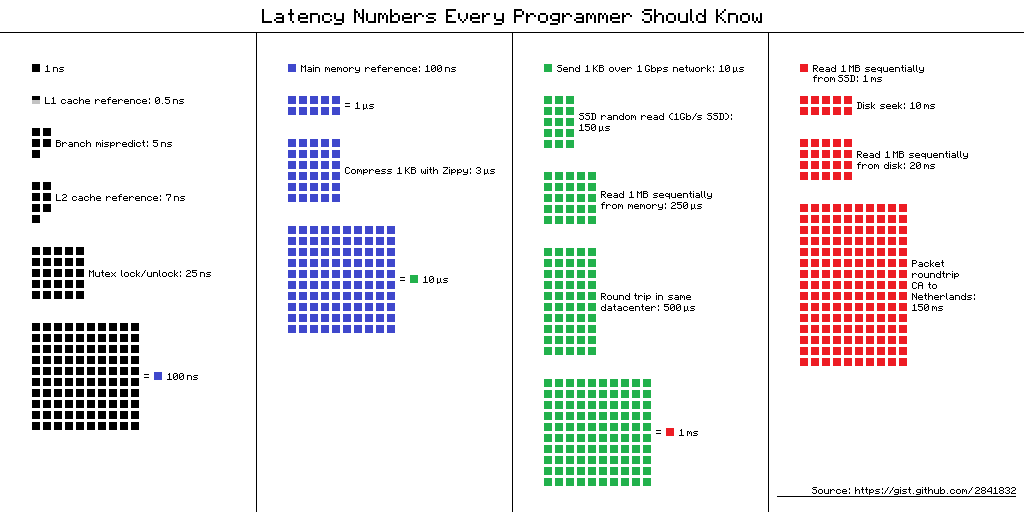

But it should not be hidden: digital services are indeed made of code and hardware, of 0s and 1s that circulate on real hardware. We cannot program while forgetting that. A bit that stays on the processor and a bit that crosses the earth will not take the same time or use the same resources.
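
A small calculation makes the gap between those two bits tangible. This is only a sketch under rounded, stated assumptions: light travels at roughly 200,000 km/s in optical fibre, half the Earth’s circumference is roughly 20,000 km, and the processor is assumed to run at 1 GHz (one cycle per nanosecond). The function names are hypothetical, and real networks add routing and processing delays on top of this physical lower bound.

```python
# Lower bound on the time a bit needs to cross half the planet,
# compared with a single processor clock cycle.
# ASSUMPTIONS (rounded, illustrative only):
FIBRE_SPEED_KM_PER_S = 200_000   # light in optical fibre, about 2/3 of c
HALF_EARTH_KM = 20_000           # about half of the Earth's circumference
CYCLE_SECONDS = 1e-9             # one clock cycle at an assumed 1 GHz


def one_way_delay_seconds(distance_km: float = HALF_EARTH_KM,
                          speed_km_per_s: float = FIBRE_SPEED_KM_PER_S) -> float:
    """Pure propagation delay, ignoring routers, queues and protocols."""
    return distance_km / speed_km_per_s


def cycles_spent_waiting(delay_seconds: float) -> int:
    """How many clock cycles elapse on the assumed processor during the delay."""
    return round(delay_seconds / CYCLE_SECONDS)


if __name__ == "__main__":
    delay = one_way_delay_seconds()
    print(f"one-way delay: {delay * 1000:.0f} ms")
    print(f"clock cycles elapsed meanwhile: {cycles_spent_waiting(delay):,}")
```

With these figures, the bit that crosses the earth needs at least a tenth of a second, during which the assumed processor goes through about a hundred million cycles.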

Developing Java code for a J2EE server or for an Android phone is definitely not the same thing. Specific structures exist to process data on Android, but the usual patterns are still used. Developers have lost the link with the hardware. It is unfortunate, because it is exciting (and useful) to know how a processor works. Why have we lost it? Abstraction and specialization (we will see this later). By losing this insight, we lose one of the strengths of development. This link is still important among hackers and embedded developers, but unfortunately it is less and less present among other developers.

DevOps practices could respond to this loss of link. But here too, we often do not go all the way: usually DevOps focuses on managing the deployment of a software solution on a mixed infrastructure (hardware and a bit of software). We would need to go further, for instance by reporting consumption metrics or by discussing execution constraints … rather than just “scaling” because it is easier.

We can always justify this distance from the hardware: productivity, specialization … but we must not confuse separating with forgetting. Separating trades and specializing, yes. But forgetting that there is hardware under the code, no! A first step would be to bring hardware courses back into schools. Just because a school teaches programming does not mean that a serious awareness of the hardware and how it works is unnecessary.

We are (too) abstract

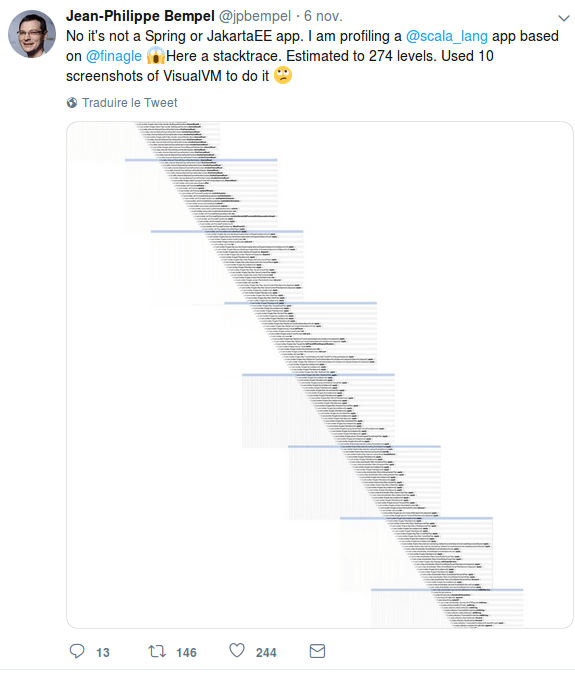

We are too virtual and too far from the hardware because we wanted to abstract ourselves from it. The multiple layers of abstraction have made it possible not to worry about hardware problems, to save time … But at what price? That of heaviness and of forgetting the hardware, as we have seen, but there is much more. How can we understand the behavior of a system with call stacks more than 200 levels deep?

Some technologies are useful but are now used systematically. This is the case, for example, of ORMs. No thought is given to whether they are worthwhile at the beginning of projects. The result: we have added a layer that consumes resources, that must be maintained, and developers who are no longer used to writing native queries. That would not be a problem if every developer knew very well how the abstraction layers work: how does Hibernate work, for example? Unfortunately, we rely on these frameworks blindly.

Joel Spolsky explains this very well in “The Law of Leaky Abstractions”:

And all this means that paradoxically, even as we have higher and higher level programming tools with better and better abstractions, becoming a proficient programmer is getting harder and harder. (…) Ten years ago, we might have imagined that new programming paradigms would have made programming easier by now. Indeed, the abstractions we’ve created over the years do allow us to deal with new orders of complexity in software development that we didn’t have to deal with ten or fifteen years ago (…) The Law of Leaky Abstractions is dragging us down.
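
The ORM case discussed earlier gives a concrete picture of such a leak. The sketch below does not use Hibernate or any real ORM: `CountingDb` and the two loader functions are hypothetical helpers written on top of Python’s standard `sqlite3` module, and the schema is invented for the example. It only imitates what naive lazy loading can do behind the developer’s back (one extra query per row) and compares it with a single hand-written join.

```python
import sqlite3


class CountingDb:
    """Hypothetical helper: wraps an in-memory SQLite database and counts SELECTs."""

    def __init__(self):
        self.conn = sqlite3.connect(":memory:")
        self.queries = 0

    def select(self, sql, params=()):
        self.queries += 1
        return self.conn.execute(sql, params).fetchall()


def build_db(rows=50):
    """Create an invented two-table schema with `rows` authors and books."""
    db = CountingDb()
    db.conn.executescript(
        """
        CREATE TABLE author (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE book (id INTEGER PRIMARY KEY, author_id INTEGER, title TEXT);
        """
    )
    ids = range(1, rows + 1)
    db.conn.executemany("INSERT INTO author VALUES (?, ?)", [(i, f"author-{i}") for i in ids])
    db.conn.executemany("INSERT INTO book VALUES (?, ?, ?)", [(i, i, f"book-{i}") for i in ids])
    return db


def titles_lazy(db):
    """Imitates naive lazy loading: one query for the books, then one per author."""
    result = []
    for _book_id, author_id, title in db.select("SELECT id, author_id, title FROM book ORDER BY id"):
        name = db.select("SELECT name FROM author WHERE id = ?", (author_id,))[0][0]
        result.append((title, name))
    return result


def titles_join(db):
    """The native query: a single join returns the same rows."""
    return db.select(
        "SELECT book.title, author.name FROM book "
        "JOIN author ON author.id = book.author_id ORDER BY book.id"
    )
```

For 50 books, the lazy version issues 51 queries and the join issues one, for identical results. Nothing in the calling code reveals the difference, which is exactly why knowing what happens under the abstraction matters.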

We believe (too much) in a miracle solution

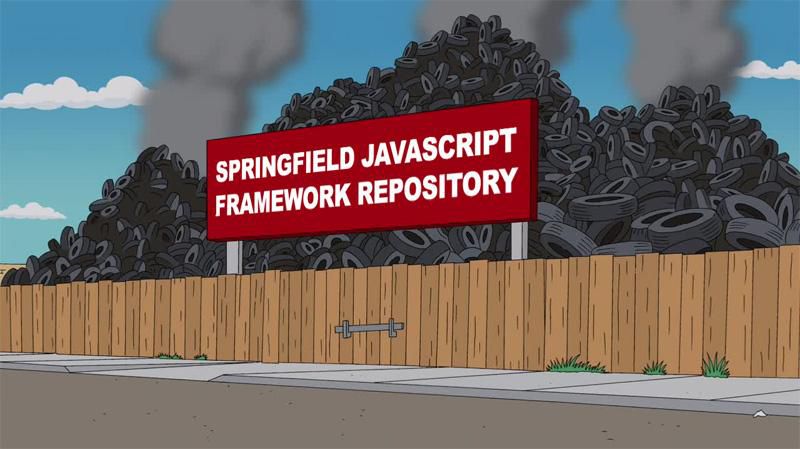

The need for abstraction is linked to another flaw: we are still waiting for miracle tools. The silver bullet that will improve our practices even further. The ideal language, the framework to go even faster, the miracle dependency management tool … Each new framework makes the same promise: to save development time, to be more efficient … And we believe it, we rush into it. We give up the frameworks in which we had invested, on which we had spent time … and we move to the newest one. This is currently the case for JS frameworks. The history of development is paved with forgotten, unmaintained, abandoned frameworks … We are the “champions” of reinventing what already exists. If we kept a framework long enough, we would have the time to master it, to optimize it, to understand it. But this is not the case. And don’t tell me that if we had not repeatedly reinvented the wheel, we would still have stone wheels … Innovating would mean improving the existing frameworks.

This is also the case for package managers: Maven, NPM … In the end, we end up in hell. The link with abstraction? Rather than managing these dependencies by hand, we add an abstraction layer, the package manager. And the side effect is that we integrate (too) easily external code that we do not control. Again, we will come back to it.

With languages, it’s the same story. Careful, I am not advocating staying with assembler and C … Take the Android world: for over 10 years, developers have been building tools and frameworks in Java. And then, as if by magic, the new language of the community is Kotlin. Imagine the impact on existing applications (if they have to change); we need to recreate tools, find good practices … For what gain?

“Today the Android team is excited to announce that we are officially adding support for the Kotlin programming language. Kotlin is a brilliantly designed, mature language that we believe will make Android development faster and more fun.”

We will come back later to the “fun” …

Honestly, we do not see any slowdown in technology renewal cycles. The pace is still frenetic. We will find the Grail one day. The problem is then the stacking of these technologies. Since none of them really dies and parts of them are kept, we develop other layers to adapt and to keep maintaining these pieces of code or these libraries. The problem is not the legacy code; it is the glue we develop around it that is at fault. Indeed, as quoted in the article “Software disenchantment”:

@sahrizv :

2014 – #microservices must be adopted to solve all problems related to monoliths.

2016 – We must adopt #docker to solve all problems related to microservices.

2018 – We must adopt #kubernetes to solve all the problems with Docker.

In the end, we spend time solving internal technical problems, we look for tools to solve the problems we have added, we spend our time adapting to these new tools, we add layers (see previous chapter …) … and we have improved neither the intrinsic quality of the software nor the way it meets the needs it must address.

We do not learn (enough)

In the end, the frantic pace of change does not allow us to stabilize on a technology. I admit that, as the old developer that I am, I was discouraged by the switch from Java to Kotlin for Android. For some, these may be real challenges, but when I think back to the time I spent learning and setting up tools … We must go far, but without starting from scratch. In any field, it is normal to keep learning and to stay curious. But this normally happens within an iterative framework of experimenting and improving. This is not the case in programming. At least not in some areas of programming, because for some technologies developers do continue to experiment (.Net, J2EE …). But it’s actually not that fun …

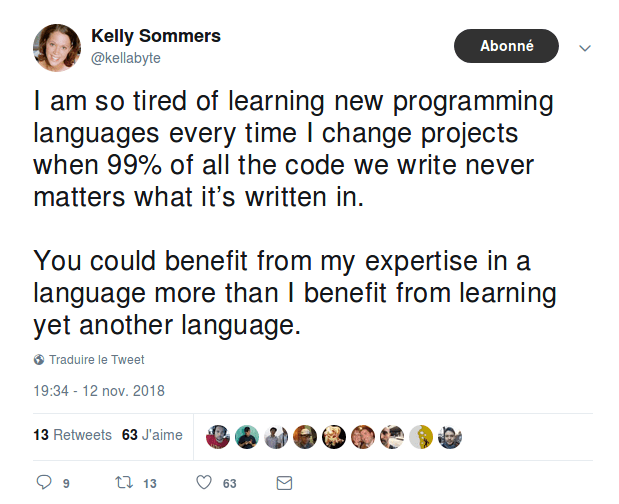

In the end, we do learn: we spend our time on tutorials, getting-started guides, conferences, meetups … only to experiment with 10% of it in a side project or even a POC, which will surely become a project in production.

As no solution really dies and new ones keep coming, we end up with projects that have a multitude of technologies to manage, along with the associated skills … Then we are surprised that the developer recruitment market is clogged. No wonder … There are a lot of developers, but it is difficult to find a React developer with 5 years of experience who also knows the Go language. The market is fragmented, like the technologies. It may be good for developers, because it creates scarcity and raises prices, but it is not good for the project!

To return to the previous chapter (believing in miracle tools …), we see this in the JavaScript world with “JS fatigue”. Developers must find their way through the world of JavaScript and its related tools. This is the price of the multitude of tools. The approach is understandable (see for example a very good explanation of how to manage it). However, this continuous learning of technologies gets in the way of learning cross-cutting domains: accessibility, agility, performance … Indeed, what proof do we have that the tools and languages we choose will not change in 4 years? Rust, Go … in 2 years? Nothing points to a clear trend.

We have fun (too much) and we do not question ourselves (enough)

Unless it is to question one technology in order to find another. Trolling is common in our world (and, I confess, I do it too). But it only ever questions one technology in favor of another, and so continues the infernal cycle of renewing tools and languages. Real questioning means asking ourselves sincerely: are we going in the right direction? Is what I do sustainable? Is it quality work? But questioning is not easy, because it is associated either with trolling (rightly or wrongly) or with a backward-looking image. How do you criticize a trend that is associated with technological progress?

Few voices rise against this state of affairs: “Software disenchantment”, “Against software development” … and it’s a shame, because questioning is a healthy practice for a professional field. It allows us to “perform” even better.

We do not question ourselves because we want to have fun. Fun is important, because if you get bored in your job, you will get depressed. On the other hand, we cannot keep changing our tools under the pretext of wanting fun all the time. There is an imbalance between the developer experience and the user experience. We want fun, but what will it really bring to the user? A “happier” product? No, we are not actors. One can also criticize the effort that goes into reducing build times and other developer conveniences. This is important, but we must always balance our efforts: speeding up my build is only worthwhile if I use the time gained to improve the user experience. Otherwise it is only tuning for our own pleasure.

We need to accept criticism, to criticize ourselves and to stop hiding behind barriers. Technical debt is an important concept, but if it becomes an excuse for bad refactoring, and above all for switching to a new fashionable technology, we might as well take on more debt. We must also stop the turf wars. What is the point of defending one’s language against another? And let’s stop repeating that “premature optimization is the root of all evil” … This comes from the computer science of the 70s, when everything was optimized. Today there is no premature optimization any more; the phrase is only an excuse to do nothing and carry on as before.

We are (badly) governed

We do not question the ethics of our field or its sustainability … This may be because our field does not really have a code of ethics (unlike doctors or lawyers). But are we really free as developers if we cannot criticize ourselves? Could we be enslaved to a cause driven by other people? The problem is not simple, but in any case we have a responsibility. Without a code of ethics, the strongest and most dishonest wins. Buzz and practices that manipulate users are more and more widespread. Without dark patterns, your product will be nothing. The biggest players (GAFA …) did not get there by accident.

Is the solution political? We would have to legislate to better govern the software world. We see it with the latest legislative responses to concrete problems: GDPR, cookie and privacy notices … the source of the problem is not solved. Maybe because politicians do not really understand the software world.

It would be better if the software world structured itself, put a code of ethics in place, regulated itself … But in the meantime, the law of the strongest continues to rule … at the expense of better structuring, better quality, real professionalization …

If this structuring is not done, developers will lose control over what they do. Meanwhile, the profession’s lack of ethics is being criticized from the outside. Rachel Coldicutt (@rachelcoldicutt), director of DotEveryOne, a UK think tank that promotes more responsible technology, encourages non-IT graduates to learn about these issues. To continue with that article, this would be fully in line with the history of computer science, a field that came out of the military world, where engineers and developers would be trained to follow decisions and commands.

A statement that echoes, in particular, the one made by David Banks (@da_banks) in the insolent “The Baffler”. D. Banks emphasized how the world of engineering is linked to authoritarianism. The reason is probably to be found in history. The first engineers were of military origin and designed siege weapons, he briefly recalls. They are still trained to “connect with the decision-making structures of the chain of command”. Unlike doctors or lawyers, engineers have no ethical authorities overseeing them and no oath to uphold. “That’s why engineers excel at outsourcing blame”: if it does not work, the fault lies with everyone else (users, managers …). “We teach them from the beginning that the most moral thing that they can do is build what they are told to build to the best of their abilities, so that the will of the user is carried out accurately and faithfully.”

With this vision, we can ask ourselves the question: can we integrate good practices and ethics in a structural and internal way in our field?

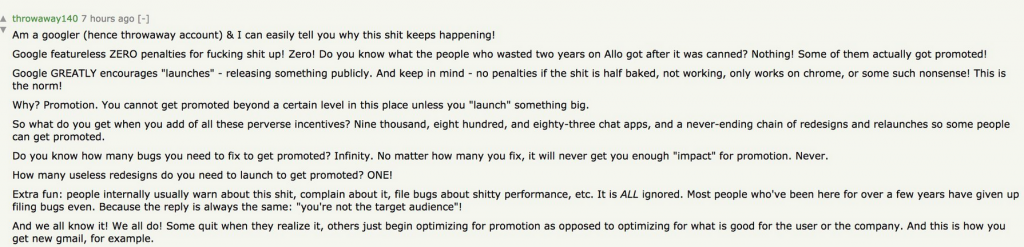

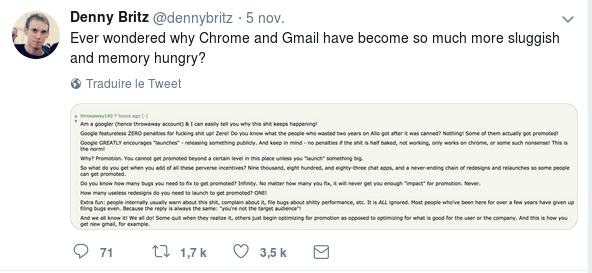

Development (too often) follows absurd decisions, like any organization

The software world fits into a traditional organizational system. Large groups, outsourcing to digital or IT services companies, web agencies … All follow the same IT project management techniques. And everyone is heading straight for the wall. No serious analysis is made of the overall cost of software (TCO), of its impact on the company, of its profitability, of its quality … What matters is speed of release (time to market), feature overload and immediate productivity. First of all because people outside this world know too little about the technical nature of software and its world. It is virtual, so it must be simple. But this is not the case. Business schools and other management factories do not offer development courses.

We keep wanting to quantify IT projects as if they were simple projects, while movements like No Estimates propose innovative approaches. Projects continue to fail: the CHAOS report states that just 30% of projects go well. And faced with this bad governance, technical teams keep fighting with technologies. Collateral damage: quality, ethics, environment … and ultimately the user. It would not be so critical if software did not have such a strong impact on the world. Software eats the world … and yes, we are eating it …

One can question the benevolence of companies: are they only interested in their profit, whatever the price, and content to leave the software world in these doldrums? The answer may come from sociology. In his book “Les Décisions absurdes”, Christian Morel explains that individuals can collectively make decisions that go completely against the intended goal. In particular, through the self-legitimization of the solution.

Morel explains this phenomenon with the “Kwai River Bridge” where a hero builds a work with zeal for his enemy before destroying it.

“This phenomenon of the “Kwai River Bridge”, where action is self-legitimized, where action is the ultimate goal of action, exists in reality more than one might think. It explains why some decisions make no sense: they have no purpose other than the action itself. “It was fun”: this is how business executives express themselves, with humor and relevance, when one of them has built a “bridge of the Kwai River” (…) Action as a goal in itself presupposes the existence of abundant resources (…) But when resources are abundant, the organization can bear the cost of human and financial means that run with the sole objective of functioning.” And the software world, by and large, provides the means to operate this way: gigantic fundraising, libraries that allow very quick releases, seemingly infinite resources … With this abundance, we build a lot of Kwai River Bridges.

In this context, the developer is responsible for the path of abundance that he follows.

Development is (too) badly managed

If these absurd decisions happen, it is not only the fault of the developer but also of the organization. And organization means management (in its various forms). Going back to Morel’s book, he speaks of a cognitive trap into which managers and technicians often fall. This was the case for the Challenger shuttle, which was launched despite a known problem with a faulty seal. The managers underestimated the risks and the engineers did not prove them. Each side blamed the other for not providing enough scientific evidence. This is often what happens in companies: some developers raise warnings, but management does not take them seriously enough.

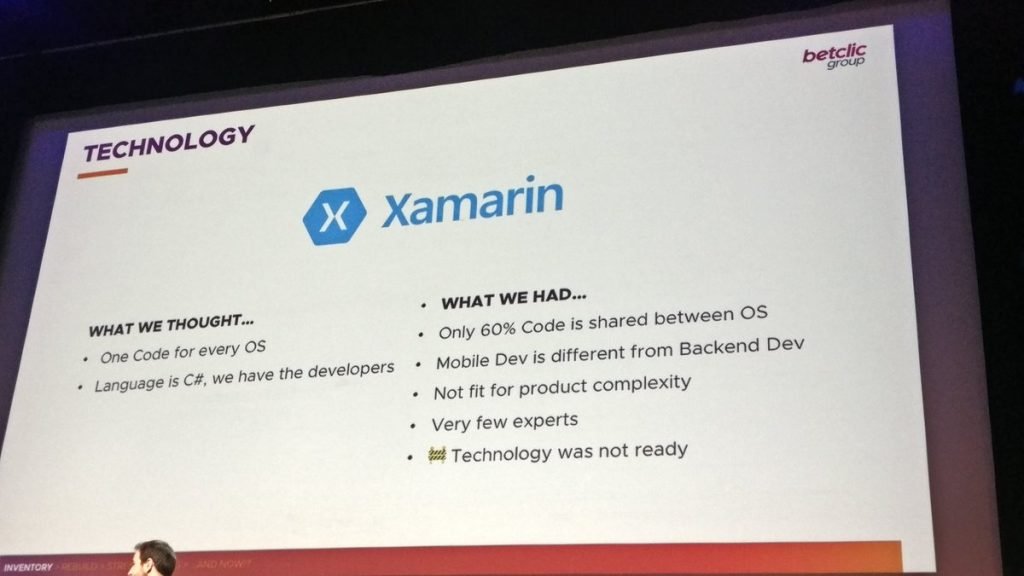

This has also happened in many organizations that wanted to develop universal mobile applications quickly. In this case, the miracle solution (here it is again) adopted by the decision-makers was the Cordova framework: no need to recruit specialized iOS and Android developers, the ability to reuse web code … The simple calculation (or lack of one) showed only benefits. On the technical side, however, it was clear that native applications were much simpler and more efficient. Five years later, conferences are full of feedback on the failures of this type of project and on restarting them from scratch in native. The link with Challenger and the cognitive traps? Management had underestimated the risks and the actual cost, and did not take the technical teams’ comments into account. The technical teams had not sufficiently substantiated and proved the ins and outs of such a framework.

At the same time, and this brings us back to the previous causes (silver bullet, having fun …), we need real engineering and a real analysis of technologies. Without this, technical teams will never be heard by management. Tools and benchmarks exist, but they are still too little known. For example, the Technology Radar, which classifies technologies in terms of adoption.

It is equally important that company management stops believing that miracle solutions exist (which brings us back to the cause of the “virtual”). You really have to calculate the costs, the TCO (Total Cost of Ownership) and the risks of technology choices. We continue to choose BPM and low-code solutions that generate code. But the hidden risks and costs are significant. According to ThoughtWorks:

“Low-code platforms use graphical user interfaces and configuration in order to create applications. Unfortunately, low-code environments are promoted with the idea that this means you no longer need skilled development teams. Such suggestions ignore the fact that writing code is just a small part of what needs to happen to create high-quality software—practices such as source control, testing and careful design of solutions are just as important. Although these platforms have their uses, we suggest approaching them with caution, especially when they come with extravagant claims for lower cost and higher productivity.”
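
To show what “really calculating the TCO” can look like in its simplest form, here is a minimal sketch. The cost model (a one-off build cost plus a constant yearly cost) is a deliberate simplification, and every figure and name in it is invented for illustration; none comes from a real project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    """A technology choice, described by two hypothetical cost figures."""
    name: str
    build_cost: float   # one-off cost to develop or buy
    yearly_cost: float  # licences, hosting, maintenance, rework, per year


def tco(option: Option, years: int) -> float:
    """Total cost of ownership over `years` under this simplified model."""
    return option.build_cost + option.yearly_cost * years


def break_even_year(cheap_to_build: Option, cheap_to_run: Option, horizon: int = 20):
    """First year in which the option that is cheaper to run has the lower TCO."""
    for year in range(1, horizon + 1):
        if tco(cheap_to_run, year) < tco(cheap_to_build, year):
            return year
    return None  # never pays off within the horizon


# Invented figures: a "miracle" solution that is quick to build but costly to
# live with, and a more demanding solution that is cheaper to operate.
quick = Option("quick", build_cost=50_000, yearly_cost=60_000)
solid = Option("solid", build_cost=150_000, yearly_cost=20_000)

if __name__ == "__main__":
    for years in (1, 3, 5):
        print(years, tco(quick, years), tco(solid, years))
    print("break-even year:", break_even_year(quick, solid))
```

With these invented figures, the quick option is cheaper for the first two years and more expensive from the third year on. A decision based on time to market alone only ever looks at the first column.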

We divide (too much) … to rule

This phenomenon of absurd decisions is reinforced by the complex fabric of software development: companies that were historically outside the digital world outsource to digital companies, IT services companies outsource to freelancers … The sharing of technical and managerial responsibility becomes even more complex, and absurd decisions more numerous.

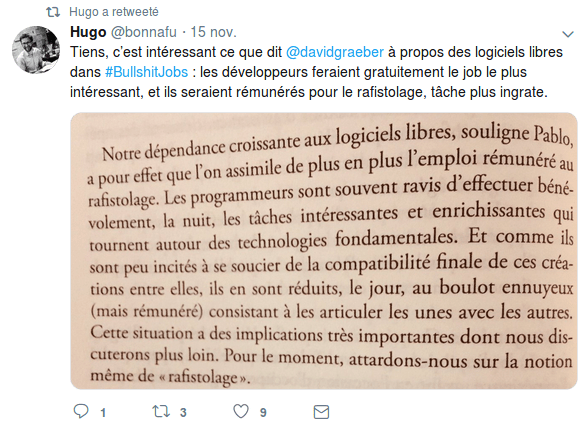

But it does not end there. We can also see the use of open source as a form of outsourcing. The same goes for the use of frameworks. We are just passive consumers, relieved of many problems (which have an impact on resources, quality …).

This is all the easier as the field is exciting, and side projects and time spent on open-source projects outside office hours are common … The search for “fun” and the time spent then benefit organizations more than developers. In this case it is difficult to quantify the real cost of a project. And yet, that would not be a problem if we ended up with top-notch software. But it does not improve quality. On the contrary, the extended organization made up of large groups, IT services companies, freelancers and communities has no limit when it comes to building the famous Kwai River bridges.

The developer is no longer a craftsman of code, but rather a pawn in a system that is open to criticism from a human point of view. This is not visible; everything is fine and we are having fun. In appearance only, because some areas of software development go further and make this exploitation much more visible: the video game industry, where working hours are exploding.

Better professionalization, a code of ethics or something similar would be useful in this situation. It would make it possible to put safeguards against excesses or against practices that are (directly or indirectly) open to criticism. But I have never heard of a developers’ guild or any other body that could defend such a code.

We (too) often lose sight of the final goal: the user

And so all this clumsiness (software that is too heavy, lack of quality …) ends up with the users. As we have to release software as quickly as possible, as we do not try to solve internal inefficiencies, and as we do not put more resources into quality, we make mediocre software. But we have so many tools for monitoring and tracking users, to detect what is happening directly on their side, that in the end we think it does not matter. That would be fine if the tools were well used. But the mass of information collected (in addition to the bugs reported by users) is only weakly exploited. Too much information, difficulty in targeting the real source of the problem … we get lost, and in the end it is the user who suffers. All software is now in beta testing. Why bother with over-quality? We might as well wait for the user to ask for it. And we come back to the first chapter: software that is uniformly slow … and poor.

Taking a step back, everyone can feel it every day, at the office or at home. Fortunately, we are saved by users’ lack of awareness of the software world. For them it is a truly virtual and magical world that they are used to. We put tools in their hands, but without an instruction manual. How can you evaluate the quality of software, its risks for the environment, its security problems … if you have no notions of computer science, even rudimentary ones?

Computer science in the 21st century is what agribusiness was for consumers in the 20th century. For reasons of productivity, we have pushed mediocre solutions based on short-term calculations: ever faster time to market, constantly rising profits … intensive agriculture, junk food, pesticides … with significant impacts on health and on the environment … Consumers now know (more and more) the disastrous consequences of these excesses, and the agri-food industry must reinvent itself technically, commercially and ethically. For software, when users understand the ins and outs of technical choices, the software industry will have to deal with the same issues. Indeed, returning to common sense and good practices is not a simple thing for agribusiness. In IT, we are starting to see it with the consequences for users’ privacy (but we are only at the very beginning).

It is important to bring the user back into software design thinking (and not just through UX and marketing workshops …). We need to rethink the whole of software: project management, the impacts of software, quality … This is the goal of some movements: software craftsmanship, software eco-design, accessibility … but these practices are still far too little known. Whose fault is it? We come back to the causes of the problem: on one side we indulge ourselves (development), and on the other we only seek profit (management). Convenient for building Kwai River bridges … but where are the users (us, actually)?

We kill our industry (and more)

We are going the wrong way. The computer industry already made mistakes in the 1970s, with non-negligible impacts. The exclusion of women from IT is one of them. Not only has this been fatal for some industries, but we can also ask how we can now provide answers that address only 50% of the population, with very low representativeness. The way back is now hard to find.

But the impact of the IT world does not stop there. The source and the model for a large part of computing come from Silicon Valley. If we leave aside the winners of Silicon Valley, local people face rising prices, social downgrading, poverty … Mary Beth Meehan’s book puts this into pictures:

“The flight to a virtual world whose net utility is still difficult to gauge would coincide with the break-up of local communities and the difficulty of talking to each other. Nobody can say whether Silicon Valley prefigures in miniature the world that is coming, not even Mary, who nevertheless ends her work with the word “dystopia”.”

In its drive towards technical progress, the software world is also creating its …environmental debt…

There are many examples, but the voices are still too weak. Maybe we will find the silver bullet, and the benefits of software will erase its wrongs … Nothing suggests that for now, quite the contrary. Because it is difficult to criticize the software world. As Mary Beth Meehan says:

“My work could just as easily be swept away or seen as leftist propaganda. I would like to think that by showing what we have decided to hide, we have served something, but I am not very confident. I do not think people who disagree with us in the first instance could change their minds.”

On the other hand, if more and more voices are raised, and if they come from people who know software (developers, architects, testers …), the system can change. The developer is neither a craftsman nor a hero: he is just a linchpin of a world without meaning. So, it’s time to move …